When I run MobileNet v1.0 on a Raspberry Pi 3B+ over RPC, the inference time is 120 ms. However, when I use a cross compiler to build a `.so` and run it on the Pi, the inference time is 280 ms. What happened?

It was my mistake: the inference time is the same over RPC and locally.
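Both numbers come from timing a single `m.run()` call with `time.time()`, which is noisy on a small board. A fairer comparison warms up first and reports the median of several runs. The sketch below assumes only a zero-argument callable (on the Pi this might be `lambda: m.run()` around the graph runtime module from the script); the helper name `benchmark_ms` is hypothetical, not a TVM API. TVM's tutorials do the same with `m.module.time_evaluator("run", ctx, number=1, repeat=10)`, which also times on the remote device when used over RPC.

```python
import statistics
import time
from typing import Callable, Dict, List


def benchmark_ms(fn: Callable[[], object], warmup: int = 3, repeat: int = 20) -> Dict[str, float]:
    """Call fn() `warmup` times untimed, then `repeat` times timed; return stats in ms."""
    if repeat < 1:
        raise ValueError("repeat must be >= 1")
    # Warm-up calls absorb one-off costs (lazy initialization, cold caches).
    for _ in range(warmup):
        fn()
    samples: List[float] = []
    for _ in range(repeat):
        start = time.perf_counter()  # monotonic, high-resolution clock
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {
        "median_ms": statistics.median(samples),
        "min_ms": min(samples),
        "max_ms": max(samples),
    }


if __name__ == "__main__":
    # Stand-in workload; with TVM this would be e.g. `lambda: m.run()` (hypothetical).
    stats = benchmark_ms(lambda: sum(range(100_000)), warmup=2, repeat=10)
    print({name: round(value, 3) for name, value in stats.items()})
```

The median is less sensitive than a single sample to occasional slow runs caused by other processes or thermal throttling on the Pi.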

OK, now I have found an unexpected difference in the results between running locally on the Pi and running over RPC.

Here is the code:

```python
import os
import zipfile
import tvm
import mxnet as mx
import cv2, time
import numpy as np
import argparse
from nnvm import compiler
from nnvm.frontend import from_mxnet
from tvm import relay
from tvm.contrib.download import download
from tvm.contrib import graph_runtime, util
from mxnet.model import load_checkpoint
from tvm import rpc

test_image = "1.jpg"
dshape = (1, 3, 240, 320)
dtype = "float32"
local_demo = False

if local_demo:
    target = "llvm"
else:
    target = tvm.target.arm_cpu('rasp3b')

sym, arg_params, aux_params = load_checkpoint('facedetect', 0)

parser = argparse.ArgumentParser()
parser.add_argument("-f", "--frontend", help="Frontend for compilation, nnvm or relay",
                    type=str, default="relay")
args = parser.parse_args()

# Compile the MXNet checkpoint with the selected frontend.
if args.frontend == "relay":
    net, params = relay.frontend.from_mxnet(sym, {"data": dshape}, arg_params=arg_params, aux_params=aux_params)
    with relay.build_config(opt_level=2):
        graph, lib, params = relay.build(net, target, params=params)
elif args.frontend == "nnvm":
    net, params = from_mxnet(sym, arg_params, aux_params)
    with compiler.build_config(opt_level=3):
        graph, lib, params = compiler.build(net, target, {"data": dshape}, params=params)
else:
    parser.print_help()
    parser.exit()

# Artifacts for running locally on the Pi: a shared library linked with the ARM
# cross compiler, plus the graph JSON and the serialized parameters.
lib.export_library('model/facedetect.so', tvm.contrib.cc.create_shared, cc='/home/wanghao/program/arm_new/bin/arm-linux-gnueabihf-g++')
with open('model/facedetect.json', "w") as fo:
    fo.write(graph)
with open('model/facedetect.params', "wb") as fo:
    fo.write(relay.save_param_dict(params))


def process_image(image):
    # Resize to (width, height), convert BGR -> RGB, subtract the mean, HWC -> NCHW.
    rect = cv2.resize(image, (dshape[3], dshape[2]))
    rect = cv2.cvtColor(rect, cv2.COLOR_BGR2RGB).astype(np.float32)
    rect -= np.array([104, 123, 117])
    rect = rect.transpose((2, 0, 1))
    img_data = np.expand_dims(rect, axis=0)
    return img_data


def draw_box(img, out, thresh=0.5):
    # Each detection row is [class_id, score, x1, y1, x2, y2], box scaled to the image size.
    for det in out:
        cid = int(det[0])
        if cid >= 0:
            score = det[1]
            print(score)
            if score > thresh:
                scales = [img.shape[1], img.shape[0]] * 2  # list repetition: [w, h, w, h]
                # np.int was removed in NumPy 1.24; the builtin int is equivalent here.
                x1, y1, x2, y2 = (np.array(det[2:6]) * scales).astype(int)
                cv2.rectangle(img, (x1, y1), (x2, y2), (0, 0, 255))
                text = class_names[cid]
                cv2.putText(img, '{:s} {:.3f}'.format(text, score), (x1, y1 - 2), cv2.FONT_ITALIC, 1, (0, 0, 255))


if local_demo:
    ctx = tvm.cpu()
    m = graph_runtime.create(graph, lib, ctx)
else:
    # RPC path: export a tar archive (not the cross-compiled .so), upload it, load it on the Pi.
    host = '192.168.1.114'
    port = 9090
    remote = rpc.connect(host, port)
    tmp = util.tempdir()
    lib_fname = tmp.relpath('net.tar')
    lib.export_library(lib_fname)
    remote.upload(lib_fname)
    rlib = remote.load_module('net.tar')
    ctx = remote.cpu()
    m = graph_runtime.create(graph, rlib, ctx)

m.set_input(**params)
class_names = ["face",]
cap = cv2.VideoCapture(0)

while True:
    ret, frame = cap.read()
    if not ret:  # stop if the camera returns no frame
        break
    img_data = process_image(frame)
    m.set_input('data', tvm.nd.array(img_data.astype(dtype)))
    t1 = time.time()
    m.run()
    print('fps: ', 1 / (time.time() - t1))
    tvm_output = m.get_output(0).asnumpy()[0]
    draw_box(frame, tvm_output, 0.8)
    cv2.imshow('hello', frame)
    if cv2.waitKey(1) == 27:  # Esc quits
        break
```
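Live webcam frames differ from run to run, so comparing the local and RPC detections on them mixes input differences with model differences. A minimal sketch of the script's `process_image` in NumPy alone, assuming the frame has already been resized to 320×240 (the `cv2.resize` step is left out); reversing the channel axis performs the same swap as `cv2.COLOR_BGR2RGB` for a 3-channel image. The function name `preprocess_bgr` is hypothetical. Saving its output once with `np.save` and loading that same file in both the local and the RPC script gives both deployments identical input.

```python
import numpy as np

# Per-channel values copied from the script's process_image; like the original,
# they are subtracted after the BGR -> RGB conversion.
MEAN = np.array([104, 123, 117], dtype=np.float32)


def preprocess_bgr(frame_bgr: np.ndarray) -> np.ndarray:
    """NumPy-only mirror of process_image for an already-resized HxWx3 uint8 BGR frame."""
    if frame_bgr.ndim != 3 or frame_bgr.shape[2] != 3:
        raise ValueError("expected an HxWx3 image")
    rgb = frame_bgr[:, :, ::-1].astype(np.float32)  # same channel swap as cv2.COLOR_BGR2RGB
    rgb -= MEAN                                     # broadcasts over the channel axis
    chw = rgb.transpose((2, 0, 1))                  # HWC -> CHW
    return np.expand_dims(chw, axis=0)              # add batch dimension -> NCHW


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    frame = rng.integers(0, 256, size=(240, 320, 3), dtype=np.uint8)
    data = preprocess_bgr(frame)
    print(data.shape, data.dtype)  # (1, 3, 240, 320) float32
```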

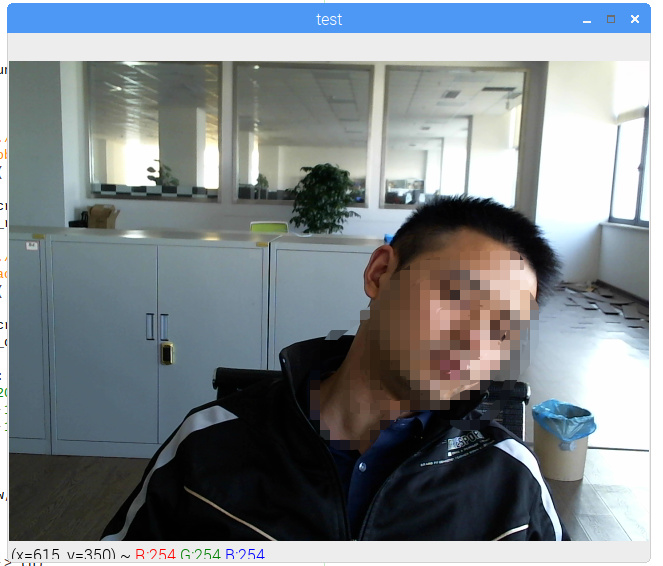

Result when running locally on the Pi.

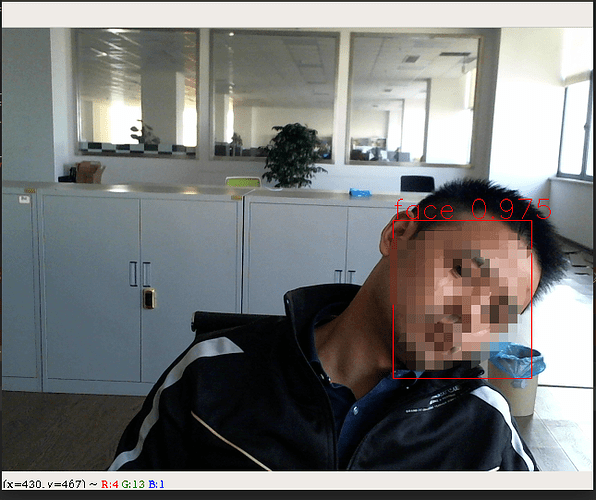

Result when running over RPC.

Yes: when running locally on the Pi, the detected face probability is about 0.2, but over RPC it is about 0.9. Maybe the problem is `tvm.contrib.cc.create_shared`, but I don't know.
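One way to narrow this down is to feed one fixed input to both deployments, save each raw output with `np.save`, and compare them offline. If the outputs still differ for identical input, the difference comes from the compiled libraries (in the script, the local run uses the cross-compiled `.so` while the RPC run uploads a `.tar`), not from preprocessing. A minimal sketch, assuming each output is the `(N, 6)` array that the script passes to `draw_box` (`[class_id, score, x1, y1, x2, y2]`); the helper names and the example arrays are made up for illustration.

```python
import numpy as np


def top_score(out: np.ndarray) -> float:
    """Highest score among valid detections (class_id >= 0), the same filter draw_box uses."""
    valid = out[out[:, 0] >= 0]
    return float(valid[:, 1].max()) if len(valid) else float("nan")


def compare_outputs(local_out: np.ndarray, rpc_out: np.ndarray, atol: float = 1e-3) -> dict:
    """Summarize how far two (N, 6) detection arrays are from each other."""
    if local_out.shape != rpc_out.shape:
        raise ValueError(f"shape mismatch: {local_out.shape} vs {rpc_out.shape}")
    diff = np.abs(local_out.astype(np.float64) - rpc_out.astype(np.float64))
    return {
        "max_abs_diff": float(diff.max()) if diff.size else 0.0,
        "allclose": bool(np.allclose(local_out, rpc_out, atol=atol)),
        "local_top_score": top_score(local_out),
        "rpc_top_score": top_score(rpc_out),
    }


if __name__ == "__main__":
    # In practice these would come from np.load on the two saved outputs (hypothetical files).
    local = np.array([[0, 0.25, 0.1, 0.1, 0.4, 0.5], [-1, 0.0, 0, 0, 0, 0]], dtype=np.float32)
    rpc = np.array([[0, 0.75, 0.1, 0.1, 0.4, 0.5], [-1, 0.0, 0, 0, 0, 0]], dtype=np.float32)
    print(compare_outputs(local, rpc))
```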

Did you use Docker to do it?