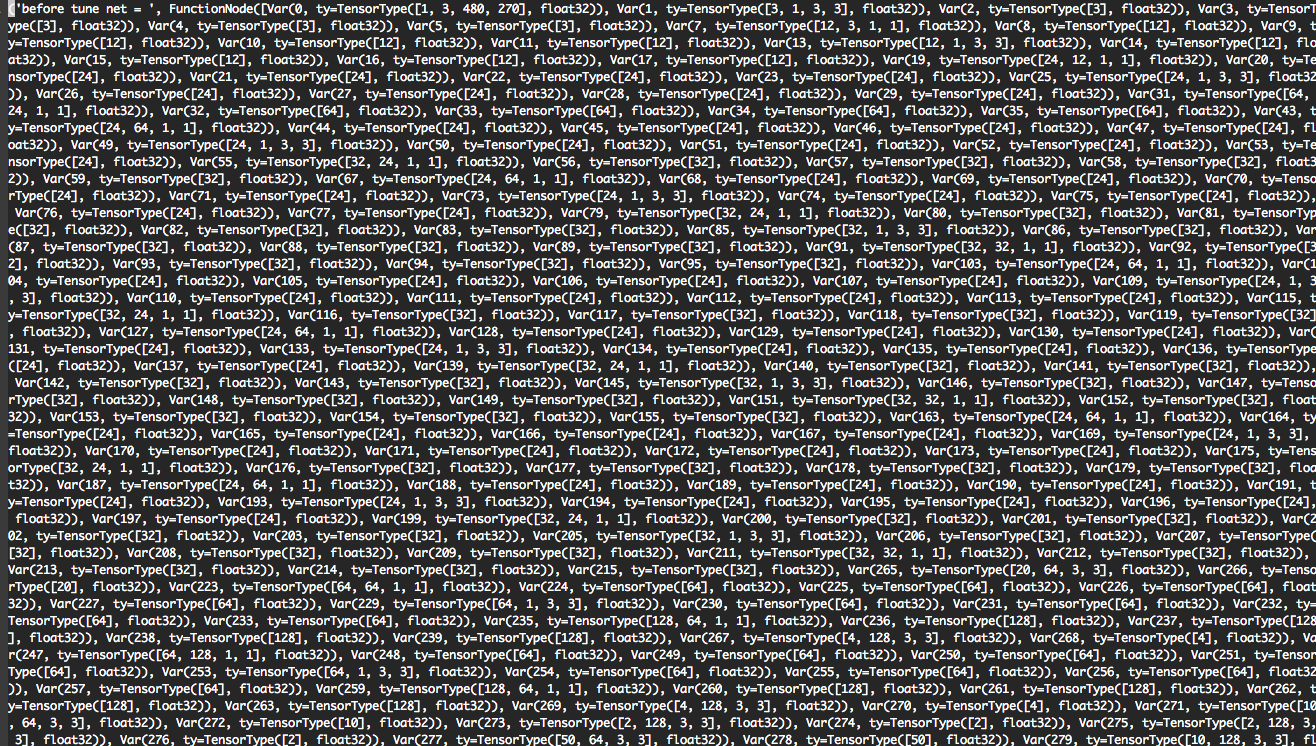

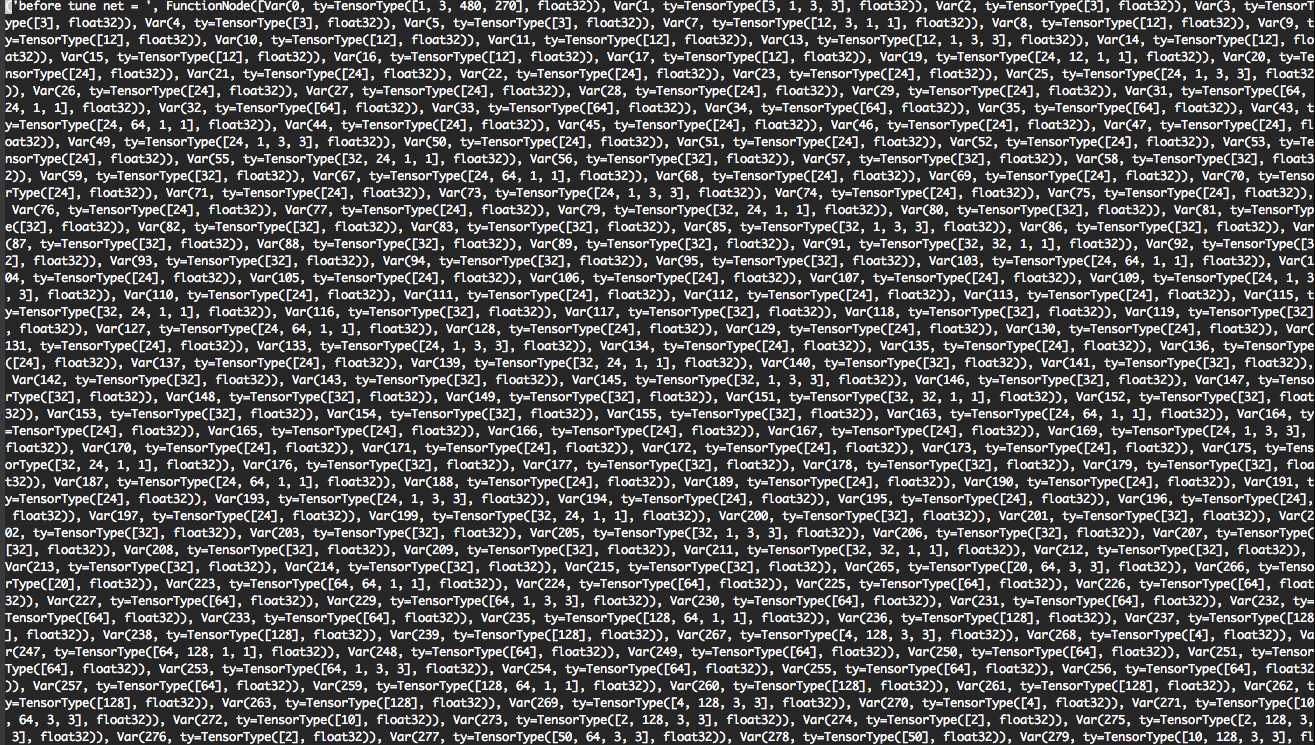

Does AutoTVM support quantized models on x86? I want to auto-tune a quantized model as in the tutorials, with code like this:

Quantize:

net_quant = relay.quantize.quantize(net, params)

Launch task:

tasks = autotvm.task.extract_from_program(net_quant, target=target, params=params, ops=(relay.op.nn.conv2d,))

Load log file:

    with autotvm.apply_history_best(log_file):
        with relay.build_config(opt_level=3):
            graph_quant, lib_quant, params_quant = relay.build_module.build(net_quant, target=target, params=params)

Error:

KeyError: 'tile_c', Error during compile func

Any help would be appreciated.
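For anyone trying to reproduce this: the KeyError may mean that the config picked up from the log has no knob named 'tile_c'. Below is a minimal sketch for listing which knob names the log actually contains. It assumes the log is one JSON record per line, with the knobs stored either under "config" -> "entity" or, in older logs, under the key "e" in the last element of "i". That layout is an assumption on my part and may differ between TVM versions. The helper name `knob_names_in_log` is hypothetical and is not part of TVM.

```python
import json


def knob_names_in_log(lines):
    """Collect knob names from AutoTVM-style JSON log lines (layout assumed)."""
    names = set()
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        if "config" in record:
            # Assumed newer layout: {"config": {"entity": [[name, kind, value], ...]}}
            entity = record["config"].get("entity", [])
        else:
            # Assumed older layout: the last element of "i" is a dict with key "e"
            entity = record["i"][-1].get("e", [])
        # Each knob entry is assumed to start with its name
        names.update(knob[0] for knob in entity)
    return names
```

It could be called as `knob_names_in_log(open(log_file))`. If 'tile_c' is not in the returned set, the records in the log were probably produced for a different schedule template than the one used at build time.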