Hi @thierry, in the fifth demo on your website, 2D Convolution Optimization, I understand the data reshaping methods in VTA, but how does VTA manage the data: when does it load and when does it store?

Thanks!

The matrix multiplication blocking tutorial should give you a good idea on how this works: https://docs.tvm.ai/vta/tutorials/matrix_multiply_opt.html#sphx-glr-vta-tutorials-matrix-multiply-opt-py

The key is to tile your computation and insert the compute_at schedule primitives at the right places. Let me know if you’d like me to elaborate further.

Thank you very much, @thierry! I think your approach includes three steps:

(1) split output channels and feature map, then reorder

(2) “compute_at” schedule

(3) split input channels and feature map, then reorder

Is that right?

My questions:

(1) Why did you put “ic.outer” at that position?

(2) Are the formats of the data feature map, the kernel, and the output feature map the same? Are the formats the same in “Blocking the Computation”? Do all 2D convolution optimizations follow the same method as the fifth example?

(3) When the input feature map is 28x28x128, the kernel is 128x3x3x128 (stride=1), and the output feature map is 28x28x128, what are the tiling parameters? How can I decide the tiling parameters for different layers?

(4) How is wgt organized in off-chip memory (DRAM) in the “2D Convolution Optimization” demo?

Thanks!

Does that mean I can set any tiling parameters and loop order, as long as I do not exceed the size of the BRAM buffers and the amount of work the GEMM and ALU units can do in one cycle?

Hi @D_Shang,

(1) ic is our reduction axis – by bringing it out, it means we can keep the accumulation sums in the FPGA while we block the computation. You could of course try different axis reordering schemes and compare the outcomes.
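To illustrate point (1), here is a minimal plain-Python sketch of a blocked matrix multiply in which the reduction loop sits inside each output tile. It is not VTA code: the function name `blocked_matmul` and the `trace` event list are hypothetical, and it assumes the matrix sizes divide evenly by the tile sizes. It only shows where loads and stores land when the reduction axis is placed this way.

```python
def blocked_matmul(a, b, tile_m, tile_n, tile_k):
    """Blocked matmul with the reduction loop (ko) inside each output tile.

    Illustration only: `trace` records hypothetical load/store events and
    does not model VTA's actual instruction stream.
    """
    m, k, n = len(a), len(b), len(b[0])
    c = [[0] * n for _ in range(m)]
    trace = []
    for mo in range(0, m, tile_m):
        for no in range(0, n, tile_n):
            # The accumulator tile stays "on chip" for the whole reduction.
            acc = [[0] * tile_n for _ in range(tile_m)]
            for ko in range(0, k, tile_k):
                # Input tiles are loaded once per reduction step.
                trace.append(("load_a", mo, ko))
                trace.append(("load_b", ko, no))
                for i in range(tile_m):
                    for j in range(tile_n):
                        for r in range(tile_k):
                            acc[i][j] += a[mo + i][ko + r] * b[ko + r][no + j]
            # One store per output tile, after the reduction has finished.
            trace.append(("store_c", mo, no))
            for i in range(tile_m):
                for j in range(tile_n):
                    c[mo + i][no + j] = acc[i][j]
    return c, trace
```

With this ordering the partial sums never leave the local accumulator. If the reduction loop were moved outside the output-tile loops instead, each output tile would have to be written out and read back between reduction steps.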

(2) By format, are you referring to memory tiling? It doesn’t have to be the same. We arrange the layout of these tensors to match the shape of VTA’s tensor computation hardware intrinsic. Blocking in our example defines the access patterns, but not the way the data is laid out.

(3) One simple rule for choosing the tiling parameters is to subdivide your tensors into chunks that fit into the chip’s local SRAM. AutoTVM should help with that, and we’re working on a release of AutoTVM support for VTA which will automate the process of deriving those parameters for you!

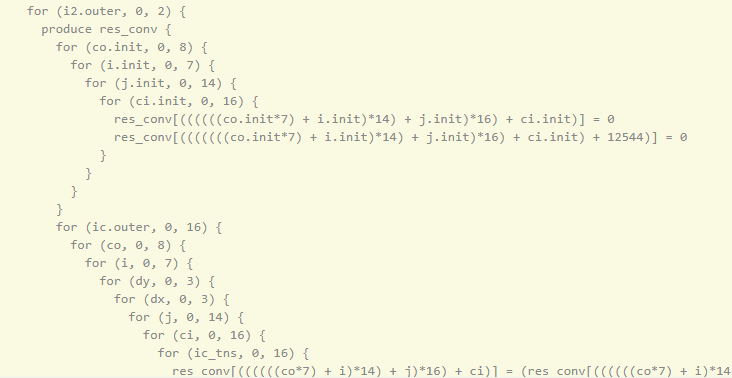
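The sizing rule in (3) can be sketched as a quick footprint check. This is only a rough estimate under stated assumptions: the helper names are hypothetical, the element widths (1-byte inputs and weights, 4-byte accumulators) and the buffer capacities in `CAPACITY` are placeholders to replace with the values from your own VTA configuration, and effects such as double buffering are ignored.

```python
def conv_tile_footprint(th, tw, tco, tci, kh=3, kw=3, stride=1,
                        inp_bytes=1, wgt_bytes=1, acc_bytes=4):
    """Bytes needed on chip for one conv2d tile.

    th, tw: output tile height/width; tco, tci: output/input channels per tile.
    Element widths are assumptions, not values read from a VTA build.
    """
    # Input rows/columns needed to produce a th x tw output tile.
    in_h = (th - 1) * stride + kh
    in_w = (tw - 1) * stride + kw
    return {
        "inp": in_h * in_w * tci * inp_bytes,
        "wgt": tco * tci * kh * kw * wgt_bytes,
        "acc": th * tw * tco * acc_bytes,
    }


def fits(footprint, capacity):
    """True if every buffer's tile footprint is within its capacity."""
    return all(footprint[name] <= capacity[name] for name in footprint)


# Hypothetical buffer capacities in bytes; substitute the ones for your build.
CAPACITY = {"inp": 32 * 1024, "wgt": 256 * 1024, "acc": 128 * 1024}

# Layer from question (3): 28x28 output, 3x3 kernel, 128 in/out channels.
whole_layer = conv_tile_footprint(28, 28, 128, 128)
one_tile = conv_tile_footprint(14, 14, 64, 16)

print(fits(whole_layer, CAPACITY))  # the untiled layer
print(fits(one_tile, CAPACITY))     # a 14x14x64 output tile, 16 input channels at a time
```

Under these placeholder capacities the whole layer does not fit while the 14x14x64 tile does. Several tilings may fit, which is why searching over them (for example with AutoTVM) is useful.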

(4) The wgt tensor is simply stored in (N, IC, H, W, n, ic) format as shown below:

```
kernel_shape = (out_channels // env.BLOCK_OUT,
                in_channels // env.BLOCK_IN,
                kernel_h,
                kernel_w,
                env.BLOCK_OUT,
                env.BLOCK_IN)
```

And for your question from yesterday, indeed BRAM is the main constraint. Our support for AutoTVM will make this derivation a whole lot easier than figuring out a schedule via trial and error.
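To make the (N, IC, H, W, n, ic) weight layout concrete, here is a small sketch that maps a logical weight index (output channel, input channel, kernel row, kernel column) to its flat row-major offset in that tiled shape. The function name is hypothetical, and `block_out` / `block_in` stand in for `env.BLOCK_OUT` / `env.BLOCK_IN`; the default of 16 is an assumption, so use the values from your own configuration.

```python
def packed_wgt_offset(oc, ic, kh, kw, out_channels, in_channels,
                      kernel_h, kernel_w, block_out=16, block_in=16):
    """Flat row-major offset of weight element (oc, ic, kh, kw) in the tiled layout.

    block_out/block_in stand in for env.BLOCK_OUT/env.BLOCK_IN (16 is assumed).
    """
    shape = (out_channels // block_out, in_channels // block_in,
             kernel_h, kernel_w, block_out, block_in)
    index = (oc // block_out, ic // block_in, kh, kw,
             oc % block_out, ic % block_in)
    # Standard row-major linearization of `index` within `shape`.
    offset = 0
    for dim, idx in zip(shape, index):
        offset = offset * dim + idx
    return offset
```

The innermost `block_out * block_in` elements are contiguous, so each block of weights that feeds one tensor-intrinsic operation sits in a single contiguous chunk of memory.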

Hi Thierry,

Thanks a lot for sharing all this about VTA.

In the first tutorial, vta_get_started.py, I see:

s[A_buf].set_scope(env.acc_scope)

and

s[A_buf].pragma(s[A_buf].op.axis[0], env.dma_copy)

My question is: do we need to allocate A_buf in SRAM first and then do the DMA copy? If so, could you point out where the code is?

In the TVM code that you pasted, we are simply setting the scope of A_buf so that it is stored in the accumulator memory (a.k.a. register file), and using the env.dma_copy pragma to tell the TVM compiler that data will be copied to that buffer via a 2D DMA copy.

For details on how that 2D DMA copy works, you’ll need to look at vta/python/vta/ir_pass.py
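As a rough mental model of what a 2D DMA copy does, here is a plain-Python sketch that copies a rows x cols tile out of a flat, row-major array using a row stride. This is not the code in ir_pass.py; the function name and parameters are hypothetical and only illustrate the idea of a strided 2D transfer into a local buffer.

```python
def dma_copy_2d(dram, base, rows, cols, stride):
    """Copy a rows x cols tile out of a flat, row-major DRAM array.

    `base` is the offset of the tile's first element and `stride` is the
    element distance between consecutive rows in DRAM.
    """
    local = []
    for r in range(rows):
        start = base + r * stride
        # Each row of the tile is one contiguous burst.
        local.extend(dram[start:start + cols])
    return local


# An 4x8 matrix stored flat; fetch the 2x3 tile starting at row 1, column 2.
dram = list(range(32))
tile = dma_copy_2d(dram, base=1 * 8 + 2, rows=2, cols=3, stride=8)
```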