Thank you @MarisaKirisame

Let's say I have the following function. Which Python object is the graph representation?

My understanding is that a module consists of functions, and that the module is the graph representation.

def example():

shape = (1, 10)

dtype = 'float32'

tp = relay.TensorType(shape, dtype)

x = relay.var("x", tp)

y = relay.var("y", tp)

out = relay.var("o", tp)

z = relay.add(x, y)

func = relay.Function([out], z)

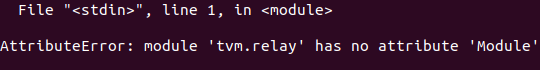

mod = relay.Module({"main": func})

The outputs are here:

    >>> z
    CallNode(Op(add), [Var(x, ty=TensorType([1, 10], float32)), Var(y, ty=TensorType([1, 10], float32))], (nullptr), [])

    >>> func
    FunctionNode([Var(o, ty=TensorType([1, 10], float32))], (nullptr), CallNode(Op(add), [Var(x, ty=TensorType([1, 10], float32)), Var(y, ty=TensorType([1, 10], float32))], (nullptr), []), [], (nullptr))

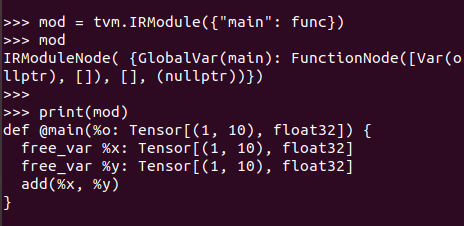

    >>> mod
    IRModuleNode( {GlobalVar(main): FunctionNode([Var(o, ty=TensorType([1, 10], float32))], (nullptr), CallNode(Op(add), [Var(x, ty=TensorType([1, 10], float32)), Var(y, ty=TensorType([1, 10], float32))], (nullptr), []), [], (nullptr))})
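
To make the question concrete, here is how I currently picture those printouts, as a toy sketch in plain Python. The classes `Var`, `Call`, `Function` and the helper `describe` are made up for illustration and are not TVM's API. I am only assuming that a module maps a name to a function, and that a function body is a tree of calls and variables.

```python
from dataclasses import dataclass
from typing import Dict, List, Union


# Toy stand-ins for the printed node types. These are NOT TVM classes.
@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class Call:
    op: str
    args: List["Expr"]


Expr = Union[Var, Call]


@dataclass(frozen=True)
class Function:
    params: List[Var]
    body: Expr


def describe(expr: Expr) -> str:
    """Render an expression tree as text by walking it recursively."""
    if isinstance(expr, Var):
        return expr.name
    return f"{expr.op}({', '.join(describe(a) for a in expr.args)})"


# Mirror the example from this post with the toy classes.
x, y, out = Var("x"), Var("y"), Var("o")
z = Call("add", [x, y])
func = Function([out], z)
module: Dict[str, Function] = {"main": func}  # name -> function

print(describe(module["main"].body))  # prints "add(x, y)"
```

In this picture the module is only a container, and the thing that looks like a graph is the body of each function. Is that the right way to read the real objects?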