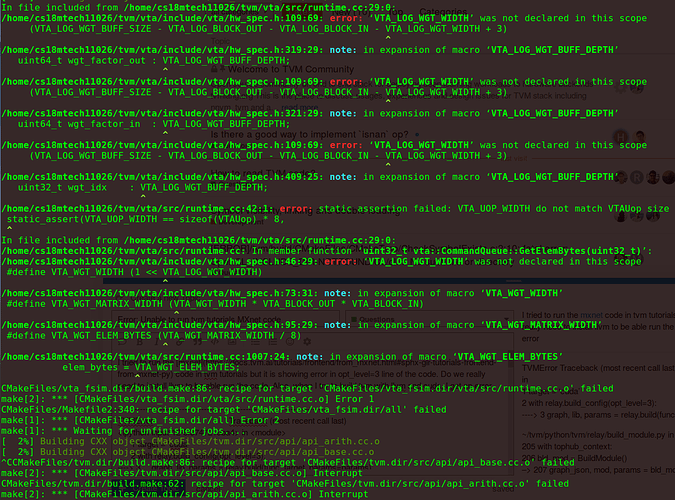

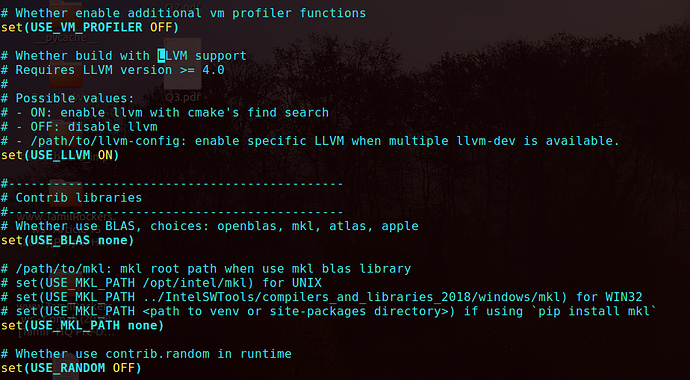

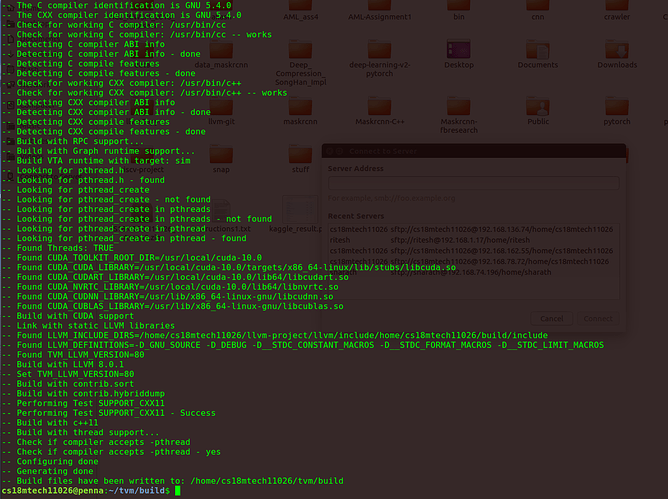

I tried to run the MXNet example from the TVM tutorials, but it throws an error at the `opt_level=3` line of the code. Do we really need to install LLVM to be able to run the code? Also, when I try to build TVM with LLVM and CUDA, I get the following error:

```
TVMError Traceback (most recent call last)
in
      1 target = 'cuda'
      2 with relay.build_config(opt_level=3):
----> 3 graph, lib, params = relay.build(func, target, params=params)

~/tvm/python/tvm/relay/build_module.py in build(mod, target, target_host, params)
    205 with tophub_context:
    206 bld_mod = BuildModule()
--> 207 graph_json, mod, params = bld_mod.build(func, target, target_host, params)
    208 return graph_json, mod, params
    209

~/tvm/python/tvm/relay/build_module.py in build(self, func, target, target_host, params)
    106 self._set_params(params)
    107 # Build the function
--> 108 self._build(func, target, target_host)
    109 # Get artifacts
    110 graph_json = self.get_json()

~/tvm/python/tvm/_ffi/_ctypes/function.py in __call__(self, *args)
    208 self.handle, values, tcodes, ctypes.c_int(num_args),
    209 ctypes.byref(ret_val), ctypes.byref(ret_tcode)) != 0:
--> 210 raise get_last_ffi_error()
    211 _ = temp_args
    212 _ = args

TVMError: Traceback (most recent call last):
  [bt] (8) /home/cs18mtech11026/tvm/build/libtvm.so(tvm::relay::ConstantFolder::ConstEvaluate(tvm::relay::Expr)+0x557) [0x7fc76f822a07]
  [bt] (7) /home/cs18mtech11026/tvm/build/libtvm.so(+0xa59fd8) [0x7fc76f937fd8]
  [bt] (6) /home/cs18mtech11026/tvm/build/libtvm.so(tvm::relay::ExprFunctor<tvm::relay::Value (tvm::relay::Expr const&)>::VisitExpr(tvm::relay::Expr const&)+0x161) [0x7fc76f9418c1]
  [bt] (5) /home/cs18mtech11026/tvm/build/libtvm.so(std::_Function_handler<tvm::relay::Value (tvm::NodeRef const&, tvm::relay::ExprFunctor<tvm::relay::Value (tvm::relay::Expr const&)>*), tvm::relay::ExprFunctor<tvm::relay::Value (tvm::relay::Expr const&)>::InitVTable()::{lambda(tvm::NodeRef const&, tvm::relay::ExprFunctor<tvm::relay::Value (tvm::relay::Expr const&)>*)#6}>::_M_invoke(std::_Any_data const&, tvm::NodeRef const&, tvm::relay::ExprFunctor<tvm::relay::Value (tvm::relay::Expr const&)>*&&)+0x34) [0x7fc76f938d14]
  [bt] (4) /home/cs18mtech11026/tvm/build/libtvm.so(tvm::relay::Interpreter::VisitExpr(tvm::relay::CallNode const*)+0x59b) [0x7fc76f94bdab]
  [bt] (3) /home/cs18mtech11026/tvm/build/libtvm.so(tvm::relay::Interpreter::Invoke(tvm::relay::Closure const&, tvm::Array<tvm::relay::Value, void> const&)+0x8d) [0x7fc76f94a06d]
  [bt] (2) /home/cs18mtech11026/tvm/build/libtvm.so(tvm::relay::Interpreter::InvokePrimitiveOp(tvm::relay::Function const&, tvm::Array<tvm::relay::Value, void> const&)+0xa3f) [0x7fc76f948d5f]
  [bt] (1) /home/cs18mtech11026/tvm/build/libtvm.so(tvm::relay::CompileEngineImpl::JIT(tvm::relay::CCacheKey const&)+0x1b3) [0x7fc76f90e933]
  [bt] (0) /home/cs18mtech11026/tvm/build/libtvm.so(+0xb80ddb) [0x7fc76fa5eddb]
  File "/home/cs18mtech11026/tvm/python/tvm/_ffi/_ctypes/function.py", line 72, in cfun
    rv = local_pyfunc(*pyargs)
  File "/home/cs18mtech11026/tvm/python/tvm/relay/backend/_backend.py", line 88, in build
    return _build.build(funcs, target=target, target_host=target_host)
  File "/home/cs18mtech11026/tvm/python/tvm/build_module.py", line 627, in build
    mhost = codegen.build_module(fhost_all, str(target_host))
  File "/home/cs18mtech11026/tvm/python/tvm/codegen.py", line 36, in build_module
    return _Build(lowered_func, target)
  File "/home/cs18mtech11026/tvm/python/tvm/_ffi/_ctypes/function.py", line 210, in __call__
    raise get_last_ffi_error()
  [bt] (2) /home/cs18mtech11026/tvm/build/libtvm.so(TVMFuncCall+0x61) [0x7fc76fa638e1]
  [bt] (1) /home/cs18mtech11026/tvm/build/libtvm.so(+0x4273ee) [0x7fc76f3053ee]
  [bt] (0) /home/cs18mtech11026/tvm/build/libtvm.so(tvm::codegen::Build(tvm::Array<tvm::LoweredFunc, void> const&, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&)+0xcb4) [0x7fc76f41aed4]
  File "/home/cs18mtech11026/tvm/src/codegen/codegen.cc", line 46
TVMError: Check failed: bf != nullptr: Target llvm is not enabled
```
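Reading the traceback bottom-up: the failing call is `codegen.build_module(fhost_all, str(target_host))`, reached from `ConstantFolder::ConstEvaluate` through the Relay interpreter's `CompileEngineImpl::JIT`. So even with `target = 'cuda'`, some code is compiled for the `llvm` host target, and `Target llvm is not enabled` suggests the `libtvm.so` being loaded was built without LLVM support. One thing worth checking is which `USE_*` options the `config.cmake` for that build actually sets. The sketch below is a hypothetical helper (`read_flags` and `is_enabled` are illustrative names, not TVM APIs); it assumes the usual one-option-per-line `set(USE_LLVM ...)` / `set(USE_CUDA ...)` form and only reads the config text, not the compiled library.

```python
import re

# Matches active (uncommented) lines such as `set(USE_LLVM ON)` or
# `set(USE_LLVM /usr/bin/llvm-config)`; a line starting with `#` never matches.
_SET_RE = re.compile(r'^\s*set\(\s*(USE_\w+)\s+([^)\s]+)\s*\)', re.MULTILINE)

# A subset of CMake's "false" constants, compared case-insensitively.
_FALSE_VALUES = {"OFF", "0", "FALSE", "NO", "N", ""}


def read_flags(config_text):
    """Return {option: value} for every USE_* option set in config.cmake text.

    Later set() lines override earlier ones, mirroring how CMake applies them.
    Surrounding double quotes on a value are stripped.
    """
    flags = {}
    for name, value in _SET_RE.findall(config_text):
        flags[name] = value.strip('"')
    return flags


def is_enabled(value):
    """ON, or a path such as /path/to/llvm-config, counts as enabled."""
    return value.strip().upper() not in _FALSE_VALUES


if __name__ == "__main__":
    # Illustrative input only, not the actual configuration used above.
    example = """
# Whether enable LLVM
set(USE_LLVM OFF)
set(USE_CUDA ON)
"""
    flags = read_flags(example)
    for option in ("USE_LLVM", "USE_CUDA"):
        value = flags.get(option, "OFF")
        state = "enabled" if is_enabled(value) else "disabled"
        print(f"{option} = {value} ({state})")
```

To check a real build, one would pass in the text of the config file used for it, e.g. `read_flags(open("build/config.cmake").read())`; the `build/config.cmake` location is an assumption based on the common practice of copying `cmake/config.cmake` into the build directory. If `USE_LLVM` turns out to be `OFF`, TVM would likely need to be reconfigured and rebuilt with LLVM enabled before this CUDA example can compile.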