I tried to follow the tune_relay_x86.html tutorial to tune the TensorFlow mobilenet_v1 model for x86.

tune_kernels ran successfully, but tune_graph failed.

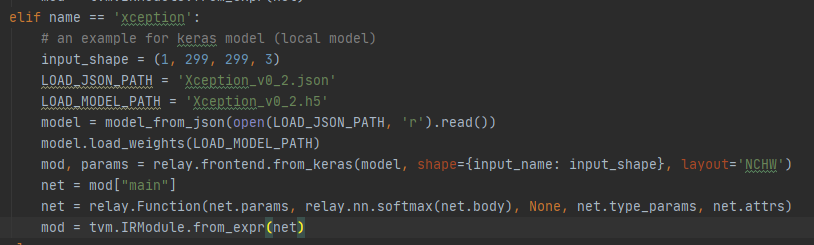

I added the following to read the TensorFlow .pb file and create the TVM module:

    elif re.match('mobilenet.+\.pb', name):
        import tensorflow as tf
        input_name = "input"
        input_shape = (1, 224, 224, 3)
        target_layout = 'NCHW'
        with tf.Session() as sess:
            print("load graph")
            with tf.gfile.GFile(name, 'rb') as f:
                graph_def = tf.GraphDef()
                graph_def.ParseFromString(f.read())
            print(name)
            print({input_name: input_shape})
            mod, params = relay.frontend.from_tensorflow(graph_def, shape={input_name: input_shape}, layout=target_layout)

I also changed the input tensor name in the tutorial code from “data” to “input” in several places:

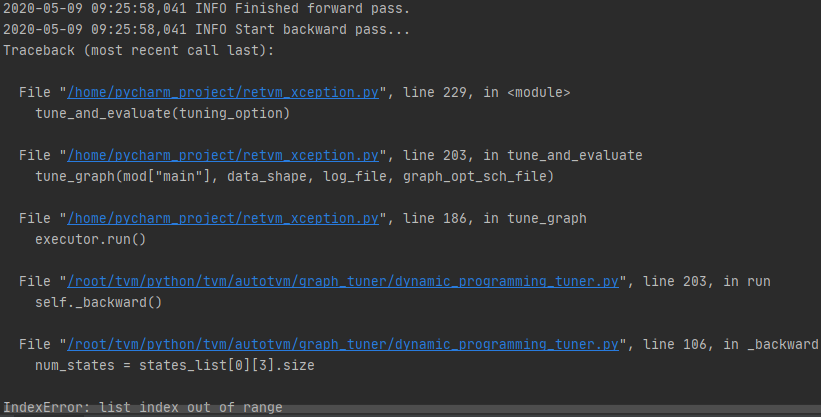
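
Note that `input_shape` in my snippet is in NHWC order, `(1, 224, 224, 3)`, while `layout` asks for NCHW, and the conv2d workloads in the log further down are in NCHW order. I don't know whether this has anything to do with the failure; the sketch below only shows how the same shape reads in each layout. `convert_shape` is a hypothetical helper written for illustration, not part of TVM or TensorFlow.

```python
def convert_shape(shape, src_layout, dst_layout):
    """Reorder a shape tuple from src_layout to dst_layout (e.g. NHWC -> NCHW)."""
    # Both layouts must name the same axes, one letter per dimension.
    if sorted(src_layout) != sorted(dst_layout) or len(shape) != len(src_layout):
        raise ValueError("layouts and shape must describe the same axes")
    # For each axis of the destination layout, pick the matching source dimension.
    return tuple(shape[src_layout.index(axis)] for axis in dst_layout)


nhwc_shape = (1, 224, 224, 3)          # shape used in the snippet above
nchw_shape = convert_shape(nhwc_shape, "NHWC", "NCHW")
print(nchw_shape)  # (1, 3, 224, 224)
```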

tune_graph failed with the following error:

2019-07-23 02:15:18,107 INFO Start to benchmark layout transformation...

2019-07-23 02:15:18,107 INFO Benchmarking layout transformation successful.

2019-07-23 02:15:18,107 INFO Start to run dynamic programming algorithm...

2019-07-23 02:15:18,107 INFO Start forward pass...

2019-07-23 02:15:18,107 INFO Finished forward pass.

2019-07-23 02:15:18,108 INFO Start backward pass...

[]

Traceback (most recent call last):

File "./tune_relay_x86.py", line 246, in <module>

tune_and_evaluate(tuning_option)

File "./tune_relay_x86.py", line 220, in tune_and_evaluate

tune_graph(mod["main"], data_shape, log_file, graph_opt_sch_file)

File "./tune_relay_x86.py", line 203, in tune_graph

executor.run()

File "/usr/local/lib/python3.5/dist-packages/tvm-0.6.dev0-py3.5-linux-x86_64.egg/tvm/autotvm/graph_tuner/dynamic_programming_tuner.py", line 189, in run

self._backward()

File "/usr/local/lib/python3.5/dist-packages/tvm-0.6.dev0-py3.5-linux-x86_64.egg/tvm/autotvm/graph_tuner/dynamic_programming_tuner.py", line 94, in _backward

num_states = states_list[0][3].size

IndexError: list index out of range
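
The failing statement is `states_list[0][3].size`, so the exception means that either `states_list` was empty or its first entry had fewer than four elements. The `[]` printed just before the traceback is consistent with an empty list, although the log does not show what produced that print. Below is a minimal sketch of the failure and of a guard that would give a clearer message. `count_states` is a hypothetical stand-in, not TVM's implementation, and the tuple layout of `entry` is an assumption.

```python
from types import SimpleNamespace


def count_states(states_list):
    """Hypothetical stand-in for the failing line, with an explicit guard."""
    if not states_list or len(states_list[0]) < 4:
        raise ValueError("no usable state entry; was any node selected for tuning?")
    return states_list[0][3].size


# Indexing an empty list reproduces the exception from the traceback.
try:
    [][0][3]
except IndexError as err:
    print("IndexError:", err)  # IndexError: list index out of range

# With a non-empty entry the lookup succeeds (the entry layout is assumed).
entry = ("node", "in_layout", "out_layout", SimpleNamespace(size=12))
print(count_states([entry]))  # 12
```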

The TensorFlow model (.pb) can be downloaded from http://download.tensorflow.org/models/mobilenet_v1_2018_02_22/mobilenet_v1_1.0_224.tgz

Full log

# ./tune_relay_x86.py

Extract tasks...

2019-07-23 02:12:15.091847: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

2019-07-23 02:12:15.095005: I tensorflow/core/platform/profile_utils/cpu_utils.cc:94] CPU Frequency: 3192000000 Hz

2019-07-23 02:12:15.095443: I tensorflow/compiler/xla/service/service.cc:150] XLA service 0x3ef4cc0 executing computations on platform Host. Devices:

2019-07-23 02:12:15.095459: I tensorflow/compiler/xla/service/service.cc:158] StreamExecutor device (0): <undefined>, <undefined>

load graph

mobilenet_v1_1.0_224_frozen.pb

{'input': (1, 224, 224, 3)}

Tuning...

[Task 2/20] Current/Best: 91.33/ 91.33 GFLOPS | Progress: (12/12) | 6.15 s Done.

[Task 3/20] Current/Best: 0.70/ 93.65 GFLOPS | Progress: (12/12) | 13.77 s Done.

[Task 4/20] Current/Best: 26.90/ 41.27 GFLOPS | Progress: (12/12) | 5.53 s Done.

[Task 5/20] Current/Best: 11.20/ 47.68 GFLOPS | Progress: (12/12) | 11.84 s Done.

[Task 6/20] Current/Best: 28.71/ 145.49 GFLOPS | Progress: (12/12) | 6.91 s Done.

[Task 7/20] Current/Best: 1.47/ 8.46 GFLOPS | Progress: (12/12) | 14.10 s Done.

[Task 8/20] Current/Best: 18.79/ 138.28 GFLOPS | Progress: (12/12) | 5.68 s Done.

[Task 9/20] Current/Best: 9.75/ 37.83 GFLOPS | Progress: (12/12) | 7.87 s Done.

[Task 10/20] Current/Best: 59.23/ 105.86 GFLOPS | Progress: (12/12) | 6.28 s Done.

[Task 11/20] Current/Best: 26.49/ 26.49 GFLOPS | Progress: (12/12) | 14.54 s Done.

[Task 12/20] Current/Best: 29.02/ 117.50 GFLOPS | Progress: (12/12) | 6.34 s Done.

[Task 13/20] Current/Best: 2.38/ 16.02 GFLOPS | Progress: (12/12) | 8.94 s Done.

[Task 14/20] Current/Best: 156.38/ 307.21 GFLOPS | Progress: (12/12) | 5.25 s Done.

[Task 15/20] Current/Best: 5.54/ 13.82 GFLOPS | Progress: (12/12) | 12.78 s Done.

[Task 16/20] Current/Best: 32.73/ 53.82 GFLOPS | Progress: (12/12) | 7.36 s Done.

[Task 17/20] Current/Best: 10.54/ 15.59 GFLOPS | Progress: (12/12) | 14.32 s Done.

[Task 18/20] Current/Best: 38.66/ 59.79 GFLOPS | Progress: (12/12) | 6.14 s Done.

[Task 19/20] Current/Best: 5.06/ 19.41 GFLOPS | Progress: (12/12) | 5.41 s Done.

[Task 20/20] Current/Best: 53.91/ 86.86 GFLOPS | Progress: (12/12) | 5.69 s Done.

input tensor: {'input': (1, 224, 224, 3)}

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('conv2d', (1, 3, 225, 225, 'float32'), (32, 3, 3, 3, 'float32'), (2, 2), (0, 0), (1, 1), 'NCHW', 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('depthwise_conv2d_nchw', (1, 32, 114, 114, 'float32'), (32, 1, 3, 3, 'float32'), (1, 1), (0, 0), (1, 1), 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('conv2d', (1, 32, 112, 112, 'float32'), (64, 32, 1, 1, 'float32'), (1, 1), (0, 0), (1, 1), 'NCHW', 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('depthwise_conv2d_nchw', (1, 64, 113, 113, 'float32'), (64, 1, 3, 3, 'float32'), (2, 2), (0, 0), (1, 1), 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('conv2d', (1, 64, 56, 56, 'float32'), (128, 64, 1, 1, 'float32'), (1, 1), (0, 0), (1, 1), 'NCHW', 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('depthwise_conv2d_nchw', (1, 128, 58, 58, 'float32'), (128, 1, 3, 3, 'float32'), (1, 1), (0, 0), (1, 1), 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('conv2d', (1, 128, 56, 56, 'float32'), (128, 128, 1, 1, 'float32'), (1, 1), (0, 0), (1, 1), 'NCHW', 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('depthwise_conv2d_nchw', (1, 128, 57, 57, 'float32'), (128, 1, 3, 3, 'float32'), (2, 2), (0, 0), (1, 1), 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('conv2d', (1, 128, 28, 28, 'float32'), (256, 128, 1, 1, 'float32'), (1, 1), (0, 0), (1, 1), 'NCHW', 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('depthwise_conv2d_nchw', (1, 256, 30, 30, 'float32'), (256, 1, 3, 3, 'float32'), (1, 1), (0, 0), (1, 1), 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('conv2d', (1, 256, 28, 28, 'float32'), (256, 256, 1, 1, 'float32'), (1, 1), (0, 0), (1, 1), 'NCHW', 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('depthwise_conv2d_nchw', (1, 256, 29, 29, 'float32'), (256, 1, 3, 3, 'float32'), (2, 2), (0, 0), (1, 1), 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('conv2d', (1, 256, 14, 14, 'float32'), (512, 256, 1, 1, 'float32'), (1, 1), (0, 0), (1, 1), 'NCHW', 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('depthwise_conv2d_nchw', (1, 512, 16, 16, 'float32'), (512, 1, 3, 3, 'float32'), (1, 1), (0, 0), (1, 1), 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('conv2d', (1, 512, 14, 14, 'float32'), (512, 512, 1, 1, 'float32'), (1, 1), (0, 0), (1, 1), 'NCHW', 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('depthwise_conv2d_nchw', (1, 512, 15, 15, 'float32'), (512, 1, 3, 3, 'float32'), (2, 2), (0, 0), (1, 1), 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('conv2d', (1, 512, 7, 7, 'float32'), (1024, 512, 1, 1, 'float32'), (1, 1), (0, 0), (1, 1), 'NCHW', 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('depthwise_conv2d_nchw', (1, 1024, 9, 9, 'float32'), (1024, 1, 3, 3, 'float32'), (1, 1), (0, 0), (1, 1), 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('conv2d', (1, 1024, 7, 7, 'float32'), (1024, 1024, 1, 1, 'float32'), (1, 1), (0, 0), (1, 1), 'NCHW', 'float32'). A fallback configuration is used, which may bring great performance regression.

WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('conv2d', (1, 1024, 1, 1, 'float32'), (1001, 1024, 1, 1, 'float32'), (1, 1), (0, 0), (1, 1), 'NCHW', 'float32'). A fallback configuration is used, which may bring great performance regression.

2019-07-23 02:15:18,107 INFO Start to benchmark layout transformation...

2019-07-23 02:15:18,107 INFO Benchmarking layout transformation successful.

2019-07-23 02:15:18,107 INFO Start to run dynamic programming algorithm...

2019-07-23 02:15:18,107 INFO Start forward pass...

2019-07-23 02:15:18,107 INFO Finished forward pass.

2019-07-23 02:15:18,108 INFO Start backward pass...

[]

Traceback (most recent call last):

File "./tune_relay_x86.py", line 246, in <module>

tune_and_evaluate(tuning_option)

File "./tune_relay_x86.py", line 220, in tune_and_evaluate

tune_graph(mod["main"], data_shape, log_file, graph_opt_sch_file)

File "./tune_relay_x86.py", line 203, in tune_graph

executor.run()

File "/usr/local/lib/python3.5/dist-packages/tvm-0.6.dev0-py3.5-linux-x86_64.egg/tvm/autotvm/graph_tuner/dynamic_programming_tuner.py", line 189, in run

self._backward()

File "/usr/local/lib/python3.5/dist-packages/tvm-0.6.dev0-py3.5-linux-x86_64.egg/tvm/autotvm/graph_tuner/dynamic_programming_tuner.py", line 94, in _backward

num_states = states_list[0][3].size

IndexError: list index out of range
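
To compare the workloads in the `Cannot find config` warnings against the tuned tasks, the warning lines can be parsed into tuples. This is a minimal sketch that assumes the log format shown above; `parse_workloads` is a hypothetical helper, and the two sample lines are copied from the full log.

```python
import ast
import re
from collections import Counter

# Capture the tuple literal between "workload=" and the trailing sentence.
WORKLOAD_RE = re.compile(r"workload=(\(.*\))\. A fallback configuration")


def parse_workloads(log_text):
    """Return the workload tuples found in autotvm fallback warnings."""
    workloads = []
    for line in log_text.splitlines():
        match = WORKLOAD_RE.search(line)
        if match:
            # The workload is printed as a Python tuple literal, so it can be evaluated safely.
            workloads.append(ast.literal_eval(match.group(1)))
    return workloads


sample_log = """\
WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('conv2d', (1, 3, 225, 225, 'float32'), (32, 3, 3, 3, 'float32'), (2, 2), (0, 0), (1, 1), 'NCHW', 'float32'). A fallback configuration is used, which may bring great performance regression.
WARNING:autotvm:Cannot find config for target=llvm -device=tracing, workload=('depthwise_conv2d_nchw', (1, 32, 114, 114, 'float32'), (32, 1, 3, 3, 'float32'), (1, 1), (0, 0), (1, 1), 'float32'). A fallback configuration is used, which may bring great performance regression.
"""

workloads = parse_workloads(sample_log)
op_counts = Counter(w[0] for w in workloads)  # count warnings per operator name
print(op_counts)
```

By my count, the full log above contains 20 such warnings (11 `conv2d` and 9 `depthwise_conv2d_nchw`), the same number as the tuning tasks.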