Error message:

```
tvm/src/runtime/module.cc:58: Check failed: f != nullptr Loader of (module.loadfile_so) is not presented.
terminate called after throwing an instance of 'dmlc::Error'
what(): [16:49:05] tvm/src/runtime/module.cc:58: Check failed: f != nullptr Loader of (module.loadfile_so) is not presented.
```

Steps to reproduce:

- Get the pretrained resnet18 model and dump the files needed for deployment: graph JSON, parameters, and library file.

```python
# -*- coding: utf-8 -*-
import ast
import os

import mxnet
import nnvm
import nnvm.compiler
import numpy as np
import tvm
import matplotlib.pylab as plt
from mxnet.gluon.model_zoo.vision import get_model
from PIL import Image
from tvm.contrib import graph_runtime

project_root = r'/home/xxx/data/tvm_demo'

block = get_model('resnet18_v1', pretrained=True)

synset_name = os.path.join(project_root, 'imagenet1000_clsid_to_human.txt')
img_name = os.path.join(project_root, 'cat.png')

# The synset file is a Python dict literal (class id -> label); parse it safely.
with open(synset_name) as f:
    synset = ast.literal_eval(f.read())

image = Image.open(img_name).resize((224, 224))
plt.imshow(image)
# plt.show()


def transform_image(image):
    # Normalize with ImageNet mean/std, then HWC -> NCHW with a batch axis.
    image = np.array(image) - np.array([123., 117., 104.])
    image /= np.array([58.395, 57.12, 57.375])
    image = image.transpose((2, 0, 1))
    image = image[np.newaxis, :]
    return image


x = transform_image(image)
print('x', x.shape)

# Convert the Gluon model to NNVM and append a softmax.
sym, params = nnvm.frontend.from_mxnet(block)
sym = nnvm.sym.softmax(sym)

target = 'llvm'
shape_dict = {'data': x.shape}
graph, lib, params = nnvm.compiler.build(sym, target, shape_dict, params=params)

# Dump the three files needed for deployment: library, graph JSON, parameters.
print("Dumping model files...")
lib_path = os.path.join(project_root, 'resnet18_deploy.so')
lib.export_library(lib_path)

graph_json_path = os.path.join(project_root, 'resnet18.json')
with open(graph_json_path, 'w') as fo:
    fo.write(graph.json())

param_path = os.path.join(project_root, 'resnet18.params')
with open(param_path, 'wb') as fo:
    fo.write(nnvm.compiler.save_param_dict(params))

# Run the compiled module in Python as a sanity check.
ctx = tvm.cpu(0)
dtype = 'float32'
m = graph_runtime.create(graph, lib, ctx)

# Set inputs; also save the preprocessed input as raw float32 for the C++ test.
data_x = tvm.nd.array(x.astype(dtype))
cat_file = os.path.join(project_root, 'cat.bin')
data_x.asnumpy().tofile(cat_file)
m.set_input('data', data_x)
m.set_input(**params)

# Execute
m.run()

# Get outputs
tvm_output = m.get_output(0, tvm.nd.empty((1000,), dtype))
top1 = np.argmax(tvm_output.asnumpy())
print('TVM prediction top-1:', top1, synset[top1])
```

The above script works well

-

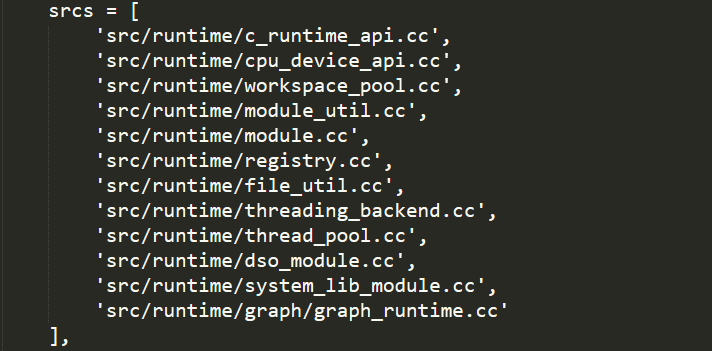

Build tvm as a static library(using bazel like build system) and get libtvm.a

-

Test cpp inference using code from this page and get the error above.
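A frequent cause of this error with a static `libtvm.a` is that the linker drops the object file that registers `module.loadfile_so`. This report does not confirm that cause; it is an assumption. The linker drops the object because nothing in the program references it by name. With GNU ld, one common remedy is to wrap the archive in `--whole-archive` / `--no-whole-archive`. In Bazel, the corresponding switch is `alwayslink = True` on the `cc_library`. The sketch below only assembles such a link line. The helper `static_tvm_link_cmd`, the source file name, and the include directories are hypothetical, and the sketch assumes Linux with g++.

```python
import shlex
from typing import List, Sequence


def static_tvm_link_cmd(
    sources: Sequence[str],
    libtvm_a: str,
    output: str,
    include_dirs: Sequence[str] = (),
) -> List[str]:
    """Build a g++ command line that links sources against a static libtvm.a.

    Hypothetical helper; assumes GNU ld (Linux). Sources come before the
    archive because ld resolves symbols left to right.
    """
    return [
        "g++",
        "-std=c++11",
        "-O2",
        *[f"-I{d}" for d in include_dirs],
        *sources,
        # Keep every object file from the archive, including ones that only
        # contain static registrations and are never referenced by name.
        "-Wl,--whole-archive",
        libtvm_a,
        "-Wl,--no-whole-archive",
        # The runtime loads the exported model library via dlopen and uses threads.
        "-ldl",
        "-pthread",
        "-o",
        output,
    ]


if __name__ == "__main__":
    cmd = static_tvm_link_cmd(
        ["cpp_deploy.cc"],  # hypothetical source file name
        "libtvm.a",
        "cpp_deploy",
        include_dirs=["tvm/include", "tvm/dlpack/include", "tvm/dmlc-core/include"],
    )
    print(shlex.join(cmd))
```

Forcing the whole archive also pulls in every other object in it. If `libtvm.a` contains compiler components, their dependencies (for example LLVM) must be linked as well. A runtime-only archive avoids that.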

Could you tell me how to fix this problem, or am I doing something wrong?