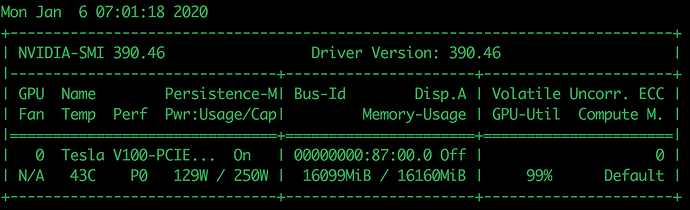

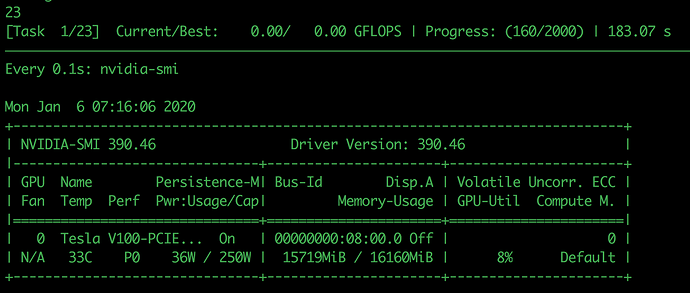

Here I compare the two tuning logs for the 20 tuning tasks of MobileNet, with two lines per task.

In each pair, the first line comes from the 680 fps model and the second from my 530 fps model.

{"v": 0.1, "r": [[7.296479683567062e-06], 0, 31.188103199005127, 1557114554.860901], "i":

{"v": 0.1, "r": [[6.209928335255671e-06], 0, 1.7554395198822021, 1578469121.1661108], "i

{"v": 0.1, "r": [[7.25566608118034e-06], 0, 24.90612006187439, 1557116969.1149082], "i":

{"v": 0.1, "r": [[3.957661777224805e-06], 0, 1.8577919006347656, 1578471887.4135346], "i

{"v": 0.1, "r": [[1.8337903959294006e-05], 0, 19.064162254333496, 1557119485.236], "i": [

{"v": 0.1, "r": [[9.10231216983461e-06], 0, 1.9765150547027588, 1578474854.435663], "i":

{"v": 0.1, "r": [[1.6040891496989785e-05], 0, 16.555699586868286, 1557120860.9099872], "i

{"v": 0.1, "r": [[4.405155762965572e-06], 0, 1.5967164039611816, 1578477002.8818524], "i

{"v": 0.1, "r": [[1.3899782663896583e-05], 0, 14.676212310791016, 1557123288.6902747], "i

{"v": 0.1, "r": [[1.049456236086878e-05], 0, 3.158353090286255, 1578479213.0481741], "i"

{"v": 0.1, "r": [[8.9185654084282e-06], 0, 5.638719081878662, 1557124607.3493454], "i": [

{"v": 0.1, "r": [[3.9061464433796365e-06], 0, 1.9471542835235596, 1578481321.9651315], "

{"v": 0.1, "r": [[2.3905133306402747e-05], 0, 9.28469204902649, 1557125807.656742], "i":

{"v": 0.1, "r": [[1.8082496841155234e-05], 0, 3.263965368270874, 1578483993.1352448], "i

{"v": 0.1, "r": [[4.46257793120303e-06], 0, 5.401535511016846, 1557127366.481157], "i": [

{"v": 0.1, "r": [[3.6464536667874836e-06], 0, 1.8057799339294434, 1578487042.5014699], "

{"v": 0.1, "r": [[1.577759098217875e-05], 0, 18.750241994857788, 1557128877.2554607], "i"

{"v": 0.1, "r": [[1.154055362727663e-05], 0, 2.2011630535125732, 1578490298.6760223], "i

{"v": 0.1, "r": [[4.082864761074439e-06], 0, 35.93831777572632, 1557130264.4687445], "i":

{"v": 0.1, "r": [[3.6962382330306295e-06], 0, 1.8576269149780273, 1578493364.439681], "i

{"v": 0.1, "r": [[2.864121934161341e-05], 0, 24.57156205177307, 1557131686.2528434], "i":

{"v": 0.1, "r": [[2.1775893125783536e-05], 0, 2.5706865787506104, 1578495158.0991685], "

{"v": 0.1, "r": [[3.5151498093213187e-06], 0, 27.85609459877014, 1557133048.0614216], "i"

{"v": 0.1, "r": [[3.7069885571309425e-06], 0, 1.8315861225128174, 1578497518.9582584], "

{"v": 0.1, "r": [[2.0601218188258213e-05], 0, 3.117086887359619, 1557134090.3906138], "i"

{"v": 0.1, "r": [[1.5957815344293542e-05], 0, 2.5050199031829834, 1578499825.8923466], "

{"v": 0.1, "r": [[3.189113317964178e-06], 0, 33.99994683265686, 1557135397.6238716], "i":

{"v": 0.1, "r": [[3.594054648184421e-06], 0, 2.2073705196380615, 1578502196.904582], "i"

{"v": 0.1, "r": [[3.773763388494878e-05], 0, 36.667195320129395, 1557136513.0227191], "i"

{"v": 0.1, "r": [[3.099785244774477e-05], 0, 1.8263132572174072, 1578504357.696405], "i"

{"v": 0.1, "r": [[3.2446967265672527e-06], 0, 10.421549797058105, 1557137769.7692177], "i

{"v": 0.1, "r": [[3.6159243494874748e-06], 0, 1.870417594909668, 1578506905.0080385], "i

{"v": 0.1, "r": [[4.1641094791666666e-05], 0, 18.9304940700531, 1557139387.259979], "i":

{"v": 0.1, "r": [[2.4386994165694284e-05], 0, 2.4521265029907227, 1578509300.884637], "i

{"v": 0.1, "r": [[3.166289999868194e-06], 0, 22.750754833221436, 1557140479.9633937], "i"

{"v": 0.1, "r": [[3.6789659826638027e-06], 0, 2.034290313720703, 1578510777.7544754], "i

{"v": 0.1, "r": [[8.745910212919524e-05], 0, 27.56096053123474, 1557141756.825347], "i":

{"v": 0.1, "r": [[5.1079029651425985e-05], 0, 1.908388614654541, 1578512517.3867476], "i

{"v": 0.1, "r": [[3.8376435901534526e-05], 0, 8.293994665145874, 1557143148.589255], "i":

{"v": 0.1, "r": [[2.2977878777589136e-05], 0, 8.308621644973755, 1578514626.8704207], "i
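To make the pairwise comparison of these logs easier to read, here is a minimal Python sketch using only the standard library. It assumes the `"r"` field follows the field order of AutoTVM's `MeasureResult` (`costs`, `error_no`, `all_cost`, `timestamp`), with costs in seconds. The names `Result`, `parse_result`, `mean_cost`, `compare`, and `PAIRS` are hypothetical helpers for illustration. `PAIRS` holds the first cost of each line above, copied by hand, because the `"i"` fields are truncated and the lines cannot be parsed as JSON directly.

```python
import json
from collections import namedtuple

# Assumed layout of the "r" field in an AutoTVM v0.1 log line:
# [costs, error_no, all_cost, timestamp], with costs in seconds.
Result = namedtuple("Result", ["costs", "error_no", "all_cost", "timestamp"])


def parse_result(line):
    """Decode the "r" field of one complete JSON log line; "i" is ignored."""
    record = json.loads(line)
    return Result(*record["r"])


def mean_cost(result):
    """Average measured kernel run time of one record, in seconds."""
    return sum(result.costs) / len(result.costs)


# Hypothetical table: first cost of each log line above, copied by hand as
# (680 fps log, 530 fps log) pairs, one pair per tuning task, in seconds.
PAIRS = [
    (7.296479683567062e-06, 6.209928335255671e-06),
    (7.25566608118034e-06, 3.957661777224805e-06),
    (1.8337903959294006e-05, 9.10231216983461e-06),
    (1.6040891496989785e-05, 4.405155762965572e-06),
    (1.3899782663896583e-05, 1.049456236086878e-05),
    (8.9185654084282e-06, 3.9061464433796365e-06),
    (2.3905133306402747e-05, 1.8082496841155234e-05),
    (4.46257793120303e-06, 3.6464536667874836e-06),
    (1.577759098217875e-05, 1.154055362727663e-05),
    (4.082864761074439e-06, 3.6962382330306295e-06),
    (2.864121934161341e-05, 2.1775893125783536e-05),
    (3.5151498093213187e-06, 3.7069885571309425e-06),
    (2.0601218188258213e-05, 1.5957815344293542e-05),
    (3.189113317964178e-06, 3.594054648184421e-06),
    (3.773763388494878e-05, 3.099785244774477e-05),
    (3.2446967265672527e-06, 3.6159243494874748e-06),
    (4.1641094791666666e-05, 2.4386994165694284e-05),
    (3.166289999868194e-06, 3.6789659826638027e-06),
    (8.745910212919524e-05, 5.1079029651425985e-05),
    (3.8376435901534526e-05, 2.2977878777589136e-05),
]


def compare(pairs):
    """Print per-task costs in microseconds and return both summed costs."""
    total_680, total_530 = 0.0, 0.0
    for task, (cost_680, cost_530) in enumerate(pairs, start=1):
        total_680 += cost_680
        total_530 += cost_530
        print(f"task {task:2d}: {cost_680 * 1e6:7.2f} us vs "
              f"{cost_530 * 1e6:7.2f} us (ratio {cost_680 / cost_530:.2f})")
    return total_680, total_530


# Usage: per-task table, summed kernel costs, and how often each log wins.
total_680, total_530 = compare(PAIRS)
wins_530 = sum(1 for c680, c530 in PAIRS if c530 < c680)
print(f"summed: {total_680 * 1e6:.1f} us vs {total_530 * 1e6:.1f} us; "
      f"530 fps log cheaper in {wins_530}/{len(PAIRS)} tasks")
```

With the values copied here, the 530 fps log has the lower cost in 16 of the 20 tasks. Summing per-task costs is only a rough proxy for end-to-end latency, though: one task can serve several layers that share the same workload, and time spent outside the tuned kernels is not counted.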