Hi folks,

Just an FYI: we’ve been working on the CPU backend for CNNs lately, and I think we’ve got some pretty solid improvements over our previous baselines. This leverages a bunch of code from the current master repo and elsewhere; the key modifications have been:

a) Implementing various ops in NCHWc format, so the entire graph can stay in that layout without the layout transform passes inserting unnecessary `__layout_transform__` ops,

b) AutoTVM’ing the NCHWc implementation,

c) Adding a fast NCHWc implementation of F(4x4, 3x3) (Winograd) convolutions.
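For anyone not familiar with the layout in (a), here is a rough NumPy sketch of what packing NCHW into NCHWc means. This is only an illustration, not the TVM code: the helper names `nchw_to_nchwc` / `nchwc_to_nchw` are made up for this post, and the inner block size of 8 is an assumed value (in practice it is one of the things tuning picks).

```python
import numpy as np

def nchw_to_nchwc(x, c_block):
    """Pack (N, C, H, W) into (N, C // c_block, H, W, c_block).

    Hypothetical helper for illustration; assumes C divides evenly by c_block.
    """
    n, c, h, w = x.shape
    assert c % c_block == 0, "channel count must be a multiple of the block size"
    # Split C into (outer, inner), then move the inner block to the last axis
    # so that c_block consecutive channels are contiguous in memory.
    return x.reshape(n, c // c_block, c_block, h, w).transpose(0, 1, 3, 4, 2)

def nchwc_to_nchw(x):
    """Inverse of nchw_to_nchwc: (N, C_outer, H, W, c_block) -> (N, C, H, W)."""
    n, c_outer, h, w, c_block = x.shape
    return x.transpose(0, 1, 4, 2, 3).reshape(n, c_outer * c_block, h, w)

# Small example: 16 channels packed into 2 outer blocks of 8.
x = np.arange(1 * 16 * 4 * 4, dtype=np.float32).reshape(1, 16, 4, 4)
packed = nchw_to_nchwc(x, c_block=8)
restored = nchwc_to_nchw(packed)
```

Channel `k` of the original tensor ends up at outer index `k // c_block` and inner index `k % c_block`. If every op in the graph consumes and produces the packed form directly, conversions like the two above are only needed at the graph boundaries.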

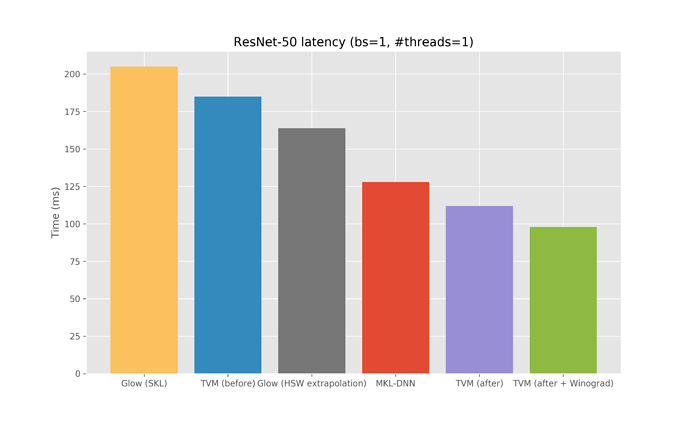

We end up getting about a 25% improvement over MKL-DNN (via MXNet) in the single-threaded, batch-size-1 case for ResNet-50 on a GCE Intel Skylake instance, which AFAIK would make TVM the current state of the art on that platform. Note that there’s zero platform-specific code in the implementation (no use of tensorize or other intrinsics, just AutoTVM on the pure schedules).

We’ll work on preparing some PRs unless folks have objections. I think it’d be useful to keep supporting both the NCHW and NCHWc layouts for our major CPU backends. The main parts, if you’re curious, are at: