I can probably give more in depth review, but here’s some suggestions with a quick look:

IDisposable - On classes such as Module or NDArray, I would implement the IDisposable interface to be consistent with dotnet, allowing usage with the using statement.

~Module()

{

/* If Dispose was not called before, clean up in the finalizer */

Dispose();

}

public void Dispose()

{

/* Tell gc to not run finalizer.

Saves this object from potentially being moved to higher gc generations */

GC.SuppressFinalize(this)

if (!UIntPtr.Zero.Equals(moduleHandle))

{

/* ... */

}

}

I would even suggest a step further and implement the disposable pattern, possibly as a base class (ex TVMDisposable), and overriding the “protected virtual void Dispose(bool disposing)” shown in the previous link.

Exceptions - Don’t be shy about throwing exceptions. They provide valuable information and hard for developers to ignore :). For example in Runtime.cs, the line: graphJsonString = Utils.ReadStringFromFile(runtimeParam.graphJsonPath); (Utils.ReadStringFromFile returns null) should throw an exception to caller as not being able to read that is indeed something “exceptional”. I would also throw in cases where you have things like if (!isInstantiated) { Console.WriteLine("Not instantiated yet!"); return; }

For the “C” functions that return error codes, I would suggest throwing on those, possibly with an Exception subclass. Maybe “public class TVMException : Exception” and subclassing for individual exceptions? Then adding a helper method like:

internal static ThrowIfError(int tvmErrorCode, string message)

{

switch(tvmErrorCode)

{

case TVMErrorCode.SomethingBadHappened:

throw new TvmSomethingBadException(message);

break;

case ...: /* all other exceptions */

}

}

Then usage like this where you do a p/invoke:

ThrowIfError(TVMFuncFree(ptr));

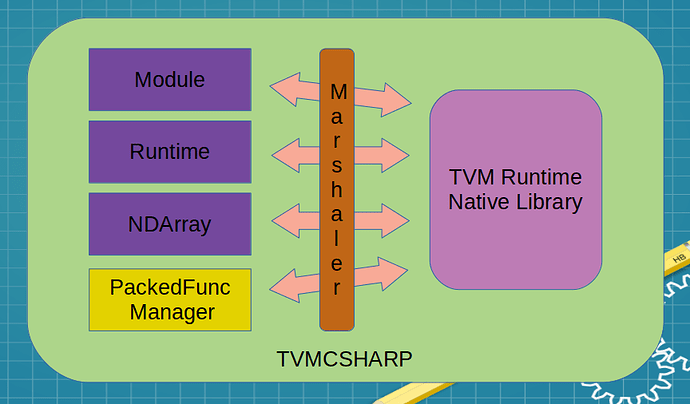

Native Library There is a public const string libName = "tvmlibs/libtvm_runtime.so"; On Windows this DLL would be called tvm_runtime.dll. With dotnet core, if you just specify “tvm_runtime”, on linux, dotnet will automatically search for “libtvm_runtime.so”, in the hosts library search paths (and LD_LIBRARY_PATHS) and on windows dotnet will automatically search for “tvm_runtime.dll” according to Windows’ library path resolution (current directory, then everything in PATH). I’m not sure if this the behavior you were looking for or not, but food for thought.

Conventions Probably my most trivial suggestion :). I would have some convention for private class level variables to easily distinguish them from local function variables. It makes parsing the code with your eyes a bit easier. In the project’s C++ code they use an underscore postifx “myVariable_”. In dotnet, its common for class level variables to have an underscore prefix, like “_myVariable”. There’s other conventions, but my suggestion is to have one.

I may have more suggestions, but I’m hungry  . I know your code is early in implementation and some of these suggestions might be something you already planned, but I’ve taken an interest as we do a lot of dotnet at work and this will be useful.

. I know your code is early in implementation and some of these suggestions might be something you already planned, but I’ve taken an interest as we do a lot of dotnet at work and this will be useful.