When I use NNVM to build the mobilenet_1_0_224_tf.h5 model, an error occurs:

```
raise NotImplementedError('relu6 not implemented')
NotImplementedError: relu6 not implemented
```

PS: ResNet50 builds successfully. The frontend is Keras.


Take a look at the Keras->CoreML converter: there, ReLU6 is decomposed into a few ops. It is very easy to implement.

Sorry for not mentioning the backend: I use TensorFlow as the backend, not CoreML.

Yes, I understand your problem. I am just saying that you can implement it the way the Keras->CoreML converter does. Here is the relevant code from the Keras->CoreML converter:

```python
if non_linearity == 'RELU6':
    # No direct support of RELU with max-activation value - use negate and
    # clip layers
    relu_output_name = output_name + '_relu'
    builder.add_activation(layer, 'RELU', input_name, relu_output_name)
    # negate it
    neg_output_name = relu_output_name + '_neg'
    builder.add_activation(layer+'__neg__', 'LINEAR', relu_output_name,
                           neg_output_name, [-1.0, 0])
    # apply threshold
    clip_output_name = relu_output_name + '_clip'
    builder.add_unary(layer+'__clip__', neg_output_name, clip_output_name,
                      'threshold', alpha = -6.0)
    # negate it back
    builder.add_activation(layer+'_neg2', 'LINEAR', clip_output_name,
                           output_name, [-1.0, 0])
    return
```

The Keras->CoreML converter splits ReLU6 into several parts, all of which are available in NNVM.
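To double-check that the four layers in the converter code really compose to ReLU6, here is a small pure-Python sketch that mirrors each stage on a single scalar. The helper names (`relu`, `linear`, `threshold`) are illustrative only, not CoreML or NNVM APIs, and the sketch assumes CoreML's LINEAR activation means `alpha * x + beta` and its unary threshold means `max(x, alpha)`.

```python
# Pure-Python mirror of the four CoreML layers used for RELU6.
# The helper names are illustrative; they are not CoreML or NNVM APIs.

def relu(x: float) -> float:
    """'RELU' activation: max(0, x)."""
    return max(0.0, x)

def linear(x: float, alpha: float, beta: float = 0.0) -> float:
    """'LINEAR' activation with params [alpha, beta]: alpha * x + beta."""
    return alpha * x + beta

def threshold(x: float, alpha: float) -> float:
    """Unary 'threshold' (assumed semantics): max(x, alpha)."""
    return max(x, alpha)

def relu6_decomposed(x: float) -> float:
    y = relu(x)             # range [0, inf)
    y = linear(y, -1.0)     # negate: (-inf, 0]
    y = threshold(y, -6.0)  # floor at -6: [-6, 0]
    return linear(y, -1.0)  # negate back: [0, 6]

def relu6_reference(x: float) -> float:
    """ReLU6 written directly: min(max(x, 0), 6)."""
    return min(max(x, 0.0), 6.0)

if __name__ == "__main__":
    for v in [-10.0, -1.0, 0.0, 2.5, 6.0, 7.0, 100.0]:
        print(v, relu6_decomposed(v), relu6_reference(v))
```

Both columns agree for every input, which is the whole point of the negate/threshold/negate trick: CoreML only offers a lower-bound threshold, so the converter flips the sign to turn the upper bound of 6 into a lower bound of -6.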

Thanks very much, it is really helpful. I will try this solution. Thanks again!

I am sorry to bother you again. My pipeline for using the model is as follows:

```python
keras_mobilenet = keras.applications.mobilenet.MobileNet(input_shape=(224, 224, 3), include_top=True, weights='imagenet', classes=1000)
keras_mobilenet.load_weights('mobilenet_1_0_224_tf.h5')
sym, params = nnvm.frontend.from_keras(keras_mobilenet)
```

The relu6 error occurred in the third line (`nnvm.frontend.from_keras`). Your solution is to divide relu6 into several parts. On the one hand, the CoreML converter is not supported on Ubuntu. On the other hand, I do not know how and where to divide relu6. Could you explain it in detail?

Thanks very much.

ReLU6 is supported in the latest Keras frontend.

https://github.com/dmlc/nnvm/commit/bd4f3ba

Can you update your code and give it a try again?
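For the "where" part of the question: a frontend of this kind typically looks up each Keras activation name in a table and emits the matching ops, raising `NotImplementedError` for names it does not know. The toy sketch below is hypothetical (`ACTIVATIONS`, `convert_activation` and the scalar `clip` are not NNVM's actual code, and I have not checked exactly how the commit above does it); it only shows that supporting relu6 can amount to adding one entry that clips to [0, 6].

```python
from typing import Callable, Dict

# Toy activation dispatcher, loosely modeled on how a Keras frontend
# translates layers. All names here are hypothetical, not NNVM's code.

def clip(x: float, a_min: float, a_max: float) -> float:
    """Scalar stand-in for an element-wise clip op."""
    return min(max(x, a_min), a_max)

ACTIVATIONS: Dict[str, Callable[[float], float]] = {
    "linear": lambda x: x,
    "relu": lambda x: max(x, 0.0),
    # Supporting a new activation is just one more entry:
    # ReLU6 is a clip to [0, 6].
    "relu6": lambda x: clip(x, 0.0, 6.0),
}

def convert_activation(act_type: str, x: float) -> float:
    try:
        fn = ACTIVATIONS[act_type]
    except KeyError:
        # Unknown names fail loudly, like the error in the original report.
        raise NotImplementedError(f"{act_type} not implemented") from None
    return fn(x)
```

Without the `"relu6"` entry, `convert_activation("relu6", x)` raises `NotImplementedError: relu6 not implemented`, which matches the shape of the original error message.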

I have tried this solution, but another problem occurred:

```
  File "/usr/local/lib/python2.7/dist-packages/nnvm-0.8.0-py2.7.egg/nnvm/compiler/graph_util.py", line 31, in infer_shape
    graph = graph.apply("InferShape")
  File "/usr/local/lib/python2.7/dist-packages/nnvm-0.8.0-py2.7.egg/nnvm/graph.py", line 234, in apply
    check_call(_LIB.NNGraphApplyPasses(self.handle, npass, cpass, ctypes.byref(ghandle)))
  File "/usr/local/lib/python2.7/dist-packages/nnvm-0.8.0-py2.7.egg/nnvm/_base.py", line 75, in check_call
    raise NNVMError(py_str(_LIB.NNGetLastError()))
nnvm._base.NNVMError: Error in operator pad27: [16:21:43] /home/zhoukun/FrameWork/nnvm/src/top/nn/nn.cc:576: Check failed: param.pad_width.ndim() == dshape.ndim() (4 vs. 3)
```

```python
target = 'llvm'
shape_dict = {'data': (1, 3, 224, 224)}
with nnvm.compiler.build_config(opt_level=2):
    graph, lib, params = nnvm.compiler.build(sym, target, shape_dict, params=params)
```

The error occurs in the last line, the `nnvm.compiler.build` call.
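The message comes from a rank check on the pad operator: `pad_width` must hold one (before, after) pair per input dimension. Here it received 4 pairs for a 3-D input, so the tensor reaching `pad27` apparently has one dimension fewer than the padding assumes. Below is a minimal pure-Python illustration of that check; `padded_shape`, `pad_nchw` and the example shapes are hypothetical, and which Keras layer produced `pad27` is not visible from the traceback.

```python
from typing import Sequence, Tuple

# Mirrors the check reported in the error message:
#   param.pad_width.ndim() == dshape.ndim()
# i.e. pad_width needs one (before, after) pair per input dimension.
# Illustration only, not NNVM's implementation.

def padded_shape(dshape: Sequence[int],
                 pad_width: Sequence[Tuple[int, int]]) -> Tuple[int, ...]:
    if len(pad_width) != len(dshape):
        raise ValueError(
            "Check failed: pad_width.ndim() == dshape.ndim() "
            f"({len(pad_width)} vs. {len(dshape)})")
    return tuple(d + before + after
                 for d, (before, after) in zip(dshape, pad_width))

# Four (before, after) pairs, as for an NCHW tensor padded only spatially.
pad_nchw = ((0, 0), (0, 0), (1, 1), (1, 1))
```

With a 4-D input such as `(1, 32, 112, 112)` the padding succeeds; with a 3-D input such as `(32, 112, 112)` it fails with the same "(4 vs. 3)" complaint, which is why the next step is to find the layer whose output lost a dimension.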

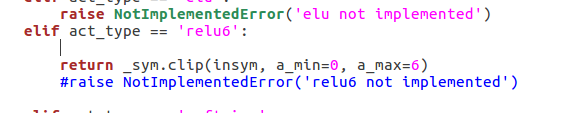

ReLU6 can be decomposed into ReLU -> LINEAR(-1.0) -> Threshold(alpha=-6.0) -> LINEAR(-1.0).

For the Threshold, you could implement it like this: `insym = _sym.clip(insym, a_min=alpha, a_max=np.finfo('float32').max)`. LINEAR and ReLU should be easy, I think.
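Why the huge upper bound works: clipping with `a_max` set to the largest finite float32 leaves the top of the range untouched, so the clip reduces to the one-sided `max(x, alpha)` that Threshold computes. A sketch in plain Python on scalars; the float32 maximum is rebuilt with `struct` from its bit pattern (0x7F7FFFFF) so NumPy is not needed, and it should equal `np.finfo('float32').max`. The `clip` and `threshold_via_clip` helpers are illustrative, not NNVM functions.

```python
import struct

# Largest finite float32, rebuilt from its IEEE-754 bit pattern.
FLOAT32_MAX = struct.unpack("<f", struct.pack("<I", 0x7F7FFFFF))[0]

def clip(x: float, a_min: float, a_max: float) -> float:
    """Scalar stand-in for an element-wise clip op."""
    return min(max(x, a_min), a_max)

def threshold_via_clip(x: float, alpha: float) -> float:
    """Threshold(alpha) = max(x, alpha), written as a clip with no effective upper bound."""
    return clip(x, alpha, FLOAT32_MAX)
```

For any finite float32 input the upper bound never triggers, so `threshold_via_clip(x, -6.0)` and `max(x, -6.0)` agree.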

Sorry, could you explain this in more detail? I am not familiar with this. Thanks.

@k-zhou08 your implementation should also work. Let me explain CoreML's method. First, CoreML applies ReLU, so every negative value becomes 0 and every non-negative value stays the same (I use NUM for the largest value), giving [0, NUM]. Then LINEAR(-1.0) negates it, giving [-NUM, 0]. Then Threshold(alpha=-6.0) computes max(-6.0, x): if x < -6.0, the result is -6.0; if x >= -6.0, the result is x. So [-NUM, 0] becomes [-6, 0] (every number >= -6.0). Finally, LINEAR(-1.0) negates it back to [0, 6] (every number <= 6.0), which is exactly ReLU6.

`_sym.clip(insym, a_min=0, a_max=6)` works the same way: everything below 0 becomes 0, and everything above 6 becomes 6. See: http://nnvm.tvmlang.org/top.html#nnvm.symbol.clip
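The CoreML argument can also be written as a short derivation. Each line is one layer, with the range of its output on the right; the last step uses the identity $-\max(a, b) = \min(-a, -b)$:

$$
\begin{aligned}
y_1 &= \max(0, x) && \text{ReLU},\ y_1 \in [0, \infty) \\
y_2 &= -y_1 && \text{LINEAR}(-1.0),\ y_2 \in (-\infty, 0] \\
y_3 &= \max(-6, y_2) && \text{Threshold}(\alpha = -6.0),\ y_3 \in [-6, 0] \\
y_4 &= -y_3 = -\max(-6, -y_1) = \min(6, y_1) = \min(6, \max(0, x)) && \text{LINEAR}(-1.0),\ y_4 \in [0, 6]
\end{aligned}
$$

The final expression $\min(6, \max(0, x))$ is exactly what `clip(x, a_min=0, a_max=6)` computes.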

I mean that I used the `_sym.clip(insym, a_min=0, a_max=6)` function, and then another problem occurred:

```python
target = 'llvm'
shape_dict = {'data': (1, 3, 224, 224)}
with nnvm.compiler.build_config(opt_level=2):
    graph, lib, params = nnvm.compiler.build(sym, target, shape_dict, params=params)
```

When I call `nnvm.compiler.build(sym, target, shape_dict, params=params)`, it returns this:

```
  File "/usr/local/lib/python2.7/dist-packages/nnvm-0.8.0-py2.7.egg/nnvm/compiler/graph_util.py", line 31, in infer_shape
    graph = graph.apply("InferShape")
  File "/usr/local/lib/python2.7/dist-packages/nnvm-0.8.0-py2.7.egg/nnvm/graph.py", line 234, in apply
    check_call(_LIB.NNGraphApplyPasses(self.handle, npass, cpass, ctypes.byref(ghandle)))
  File "/usr/local/lib/python2.7/dist-packages/nnvm-0.8.0-py2.7.egg/nnvm/_base.py", line 75, in check_call
    raise NNVMError(py_str(_LIB.NNGetLastError()))
nnvm._base.NNVMError: Error in operator pad27: [16:21:43] /home/zhoukun/FrameWork/nnvm/src/top/nn/nn.cc:576: Check failed: param.pad_width.ndim() == dshape.ndim() (4 vs. 3)
```

This should have nothing to do with ReLU6. It seems to be related to a convolution or pooling op with a padding parameter. You could dig into the problem and find out which layer is the issue, for example by printing each layer's name when entering the translate function, or with whatever method you like.
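One way to follow that suggestion without editing every converter by hand is to wrap the per-layer translate function so that it records each layer's name before converting it; after a crash, the last recorded name is the suspect. This is a hypothetical sketch: `Layer`, `translate_layer`, `translate_model`, the `"BadPad"` kind and the layer names are stand-ins, not NNVM's frontend code. In practice you would apply the wrapper (or simply a `print`) to whatever function your frontend version uses to translate a layer.

```python
import functools
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical stand-ins for a frontend's layer objects and translate step.

@dataclass
class Layer:
    name: str
    kind: str

def log_layer_names(fn: Callable) -> Callable:
    """Record (and print) each layer's name before translating it."""
    seen: List[str] = []

    @functools.wraps(fn)
    def wrapper(layer: Layer, *args, **kwargs):
        seen.append(layer.name)
        print(f"translating {layer.name} ({layer.kind})")
        return fn(layer, *args, **kwargs)

    wrapper.seen = seen  # names visited so far, for post-mortem inspection
    return wrapper

@log_layer_names
def translate_layer(layer: Layer) -> str:
    # Toy failure standing in for the pad shape check.
    if layer.kind == "BadPad":
        raise ValueError("shape check failed")
    return f"{layer.name}_sym"

def translate_model(layers: List[Layer]) -> List[str]:
    return [translate_layer(layer) for layer in layers]
```

When `translate_model` raises, `translate_layer.seen[-1]` (or the last printed line) names the layer that triggered the failure.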

OK, thank you very much.