Currently, Intel and ARM have different conv2d FP32 schedules. I tried the Intel x86 schedules on an ARM Raspberry Pi 4 device. My hypothesis was that, if we are not using tensorize, the schedules should be reusable, and the one with better data reuse and more prefetcher-friendly accesses should perform better on both Intel and ARM devices (given LLVM does the right thing for us).

Intel schedules also perform the data layout conversion from NCHW to NCHWc at the Relay level and reuse that layout across many conv2d ops, amortizing the cost. The ARM NCHW conv2d spatial pack schedule, on the other hand, converts the data layout inside the conv2d schedule (to NHWChw and not NCHWc), so the conversion is repeated for each conv2d.
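To make the NCHW to NCHWc repacking concrete, here is a minimal pure-Python sketch on nested lists. The helper name `nchw_to_nchwc` and the block size `c_block` are my own illustrative choices, not TVM APIs; the real conversion is done by a Relay layout transform, and this only shows where each element ends up.

```python
def nchw_to_nchwc(data, c_block):
    """Repack a nested-list NCHW tensor into NCHW[c_block]c.

    Hypothetical helper for illustration only (not a TVM function).
    Element (n, c, h, w) moves to (n, c // c_block, h, w, c % c_block),
    so c_block neighbouring channels become the innermost dimension.
    """
    n_dim, c_dim = len(data), len(data[0])
    h_dim, w_dim = len(data[0][0]), len(data[0][0][0])
    assert c_dim % c_block == 0, "channels must be divisible by the block size"
    return [
        [
            [
                [
                    [data[n][co * c_block + ci][h][w] for ci in range(c_block)]
                    for w in range(w_dim)
                ]
                for h in range(h_dim)
            ]
            for co in range(c_dim // c_block)
        ]
        for n in range(n_dim)
    ]


# Tiny 1x4x1x2 tensor whose value encodes (channel, width) as c * 10 + w.
data = [[[[c * 10 + w for w in range(2)]] for c in range(4)]]
packed = nchw_to_nchwc(data, c_block=2)
```

With `c_block=2`, channels 2 and 3 at the same spatial position sit next to each other in `packed[0][1][h][w]`, which is what makes the innermost dimension vectorization-friendly.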

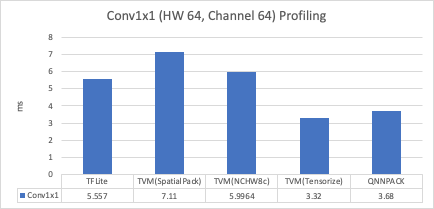

Here, I present a performance comparison between the different options. My goal is to clarify which of the many options performs best.

Setup

Device - ARM Raspberry Pi4 - 1.5 GHz

Code Changes - https://github.com/apache/incubator-tvm/pull/5334

I used op strategy to enable both Intel depthwise conv and Intel NCHWc conv2d on the ARM device. The ARM winograd schedule is very powerful, and I let op strategy and AutoTVM choose it whenever it is faster. The networks have been tuned using AutoTVM.

Different Options - I compare the performance of the following three options, plus the best of the three

ARM sch = ARM Conv2d spatial + ARM winograd + x86 DWC

x86 sch = x86 conv2d + ARM winograd + x86 DWC

Both sch = Choose the best kernel from the full tuning log

Best = Choose the best latency amongst above 3 options

Evaluation

| Network | ARM sch (ms) | x86 sch (ms) | Both sch (ms) | Best sch |
|---|---|---|---|---|
| mobilenet-v1 | 90.72 | 72.46 | 84.79 | x86 sch |
| mobilenet-v2 | 70.56 | 57.39 | 62.1 | x86 sch |
| inception-v3 | 697.03 | 587.59 | 639.4 | x86 sch |
| inception-v4 | 1825.56 | 1271.27 | 1548.91 | x86 sch |
| inception-resnet-v2 | 2163.43 | 1158.03 | 1866.85 | x86 sch |
| squeezenet | 103.84 | 92.24 | 94.44795 | x86 sch |
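For anyone who wants to re-derive the "Best sch" column, here is a small sketch that picks the lowest latency per network from the numbers in the table above. The dictionary layout and the helper name `best_schedule` are just my own choices for illustration.

```python
# Latencies in ms, copied from the table above.
latency_ms = {
    "mobilenet-v1": {"ARM sch": 90.72, "x86 sch": 72.46, "Both sch": 84.79},
    "mobilenet-v2": {"ARM sch": 70.56, "x86 sch": 57.39, "Both sch": 62.1},
    "inception-v3": {"ARM sch": 697.03, "x86 sch": 587.59, "Both sch": 639.4},
    "inception-v4": {"ARM sch": 1825.56, "x86 sch": 1271.27, "Both sch": 1548.91},
    "inception-resnet-v2": {"ARM sch": 2163.43, "x86 sch": 1158.03, "Both sch": 1866.85},
    "squeezenet": {"ARM sch": 103.84, "x86 sch": 92.24, "Both sch": 94.44795},
}


def best_schedule(options):
    """Return the name of the option with the lowest latency."""
    return min(options, key=options.get)


best = {net: best_schedule(opts) for net, opts in latency_ms.items()}
for net, name in best.items():
    print(f"{net}: {name} ({latency_ms[net][name]} ms)")
```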

I also compared against TFLite. TFLite is built from source, and I use 4 threads for measuring performance.

| Network | TVM Best (ms) | TFLite (ms) | Speedup over TFLite |
|---|---|---|---|
| mobilenet-v1 | 72.46 | 157 | 2.16671 |
| mobilenet-v2 | 57.39 | 128 | 2.23035 |
| inception-v3 | 587.59 | 1030 | 1.75292 |
| inception-v4 | 1271.27 | 2055 | 1.61649 |
| inception-resnet-v2 | 1158.03 | 2030 | 1.75298 |
| squeezenet | 92.24 | 200 | 2.16826 |

As @FrozenGene suggested, I am also adding a single-threaded comparison.

| Network | TVM Best (ms) | TFLite (ms) | Speedup over TFLite |
|---|---|---|---|
| mobilenet-v1 | 232.55 | 279 | 1.19974 |
| mobilenet-v2 | 151.49 | 179 | 1.1816 |
| inception-v3 | 2020.66 | 2673 | 1.32284 |
| inception-v4 | 4530.12 | 5552 | 1.22557 |
| inception-resnet-v2 | 4075.64 | 5041 | 1.23686 |
| squeezenet | 301.6 | 439 | 1.45557 |
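The speedup column in the TFLite tables is simply TFLite latency divided by TVM latency. A quick sketch of that arithmetic, using two rows from the tables (the function name `speedup` is only for illustration):

```python
def speedup(tvm_ms, tflite_ms):
    """Speedup of TVM over TFLite: values above 1.0 mean TVM is faster."""
    return tflite_ms / tvm_ms


# (TVM best ms, TFLite ms), copied from the tables above.
four_threads = {"mobilenet-v1": (72.46, 157), "mobilenet-v2": (57.39, 128)}
single_thread = {"mobilenet-v1": (232.55, 279), "mobilenet-v2": (151.49, 179)}

for label, rows in (("4 threads", four_threads), ("1 thread", single_thread)):
    for net, (tvm_ms, tflite_ms) in rows.items():
        print(f"{label} {net}: {speedup(tvm_ms, tflite_ms):.5f}x")
```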

Observations

- Intel x86 schedules perform best amongst all the options.

- Contrary to expectations, “Both sch”, which chooses the best kernel per op, performs worse than x86 sch. I think the main reason is that mixing the Intel x86 and ARM conv2d spatial pack schedules leads to a large number of layout transforms.

- ARM winograd performance is awesome. Maybe we should try it on Intel x86 in a separate PR. However, it apparently has a high memory footprint, as it fails at runtime for resnet with an Out of Memory error. Removing winograd works.

Discussion points/Next Steps

- Does it make sense to enable x86 schedules for ARM, and to disable the conv2d NCHW spatial pack schedule? If we just add more schedule options without disabling anything, AutoTVM tuning time goes up significantly.

- TFLite graphs initially have NHWC data layout. I call ConvertLayout to first convert it to NCHW. Then, AlterOpLayout internally converts it to NCHWc. There was an effort to directly improve the performance of the NHWC schedule for ARM some time back, but it seems it has been put on hold. Until we have a performant NHWC schedule, does it make sense to change the AutoTVM TFLite tutorial to use ConvertLayout?

@tqchen @jackwish @FrozenGene @merrymercy @thierry @masahi @yzhliu