Dear all,

Hi, I’m trying to write example code that shows how Relay IR becomes LLVM IR.

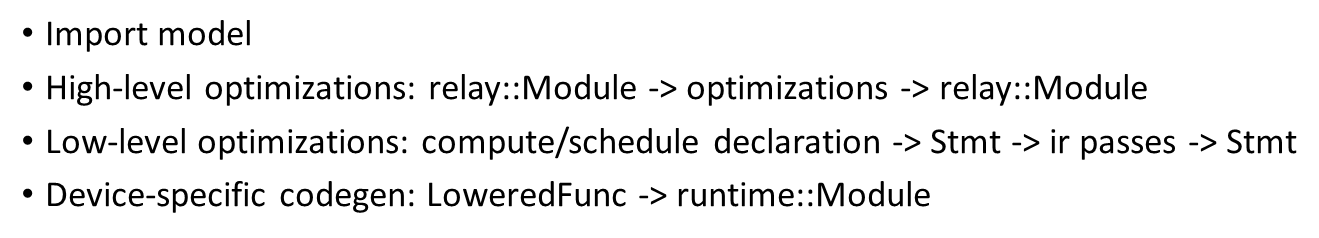

I managed to get the LLVM IR, but I can’t work out how the Relay IR relates to the Tensor Expression IR.

I’m following tvm/tests/relay_build_module_test.cc and adding some Relay optimization passes.

To understand how the two relate, I want to obtain the lowered functions.

    // Look up the registered factory for the Relay build module and create one.
    auto pfb = tvm::runtime::Registry::Get("relay.build_module._BuildModule");
    tvm::runtime::Module build_mod = (*pfb)();

    // The second argument to GetFunction is query_imports (false here).
    auto build_f = build_mod.GetFunction("build", false);
    auto json_f = build_mod.GetFunction("get_graph_json", false);
    auto mod_f = build_mod.GetFunction("get_module", false);

    // TODO: retrieve the lowered functions

Has anyone done this before?

Thanks,

Jake