Hi all,

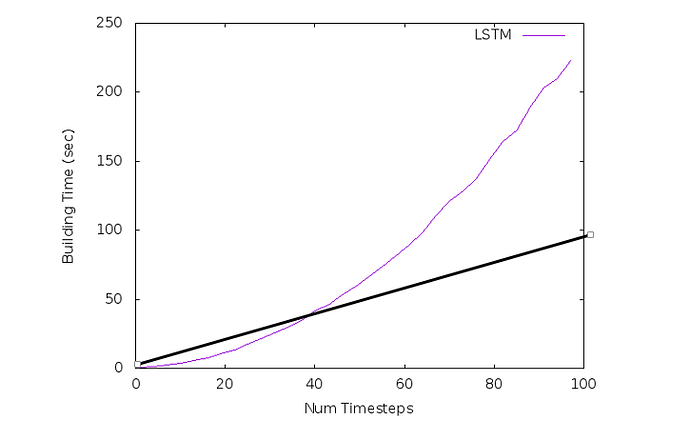

Recently I was running the huge model that I mentioned in the topic [TVM Compiler] “stack overflow” happens on Linux x64 when computation graph is “big”.

I’ve noticed that TVM spends approximately 30% of compilation time inside one part of the scheduler, tvm::schedule::MakeBoundCheck.

It seems that some effort could go into improving compilation speed here, if someone is interested in this area.

BTW, I’m not able to upload the call graph file to this forum, so here is a link: https://github.com/denis0x0D/ml_models/blob/master/call_graph.png

Steps to reproduce manually (callgrind writes its output to callgrind.out.number, where number is the process ID):

$ valgrind --tool=callgrind python3 lstm.py

$ kcachegrind callgrind.out.number
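
If kcachegrind is not available (for example on a headless machine), a text summary may be enough to see where the time goes. Below is a minimal sketch, assuming the profile was written to the current directory and that callgrind_annotate (shipped with valgrind) is on PATH. The helper names `newest_callgrind_file` and `annotate_command` are made up for this illustration; they are not part of TVM or valgrind.

```python
import glob
import os
import shutil
import subprocess


def newest_callgrind_file(directory="."):
    """Return the most recently modified callgrind.out.* file, or None."""
    candidates = glob.glob(os.path.join(directory, "callgrind.out.*"))
    if not candidates:
        return None
    return max(candidates, key=os.path.getmtime)


def annotate_command(profile_path):
    """Build a callgrind_annotate invocation for the given profile file.

    --inclusive=yes asks for costs that include callees, which is the
    view needed to see how much time is spent under one function.
    """
    return ["callgrind_annotate", "--inclusive=yes", profile_path]


if __name__ == "__main__":
    profile = newest_callgrind_file()
    if profile is None:
        print("no callgrind.out.* file found; run valgrind first")
    elif shutil.which("callgrind_annotate") is None:
        print("callgrind_annotate not found on PATH")
    else:
        # Prints a per-function cost table to stdout.
        subprocess.run(annotate_command(profile), check=True)
```

Searching the printed table for MakeBoundCheck should show its share of the total instruction count, which can be compared with the roughly 30% seen in the call graph.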

Thanks.