Hello, I'm not sure what I'm missing here, but I am trying to compare the inference performance of TVM and plain MXNet on ResNet-18. I have auto-tuned the model, and tuning gives some speedup, but the result is still slower than the plain MXNet model: I am getting 76 ms for the auto-tuned TVM model and 9 ms for the plain MXNet model. Can someone please help me?

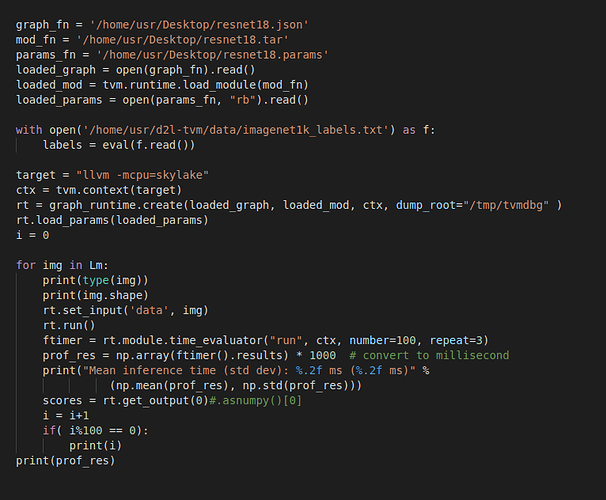

This is the code for loading the auto-tuned model:

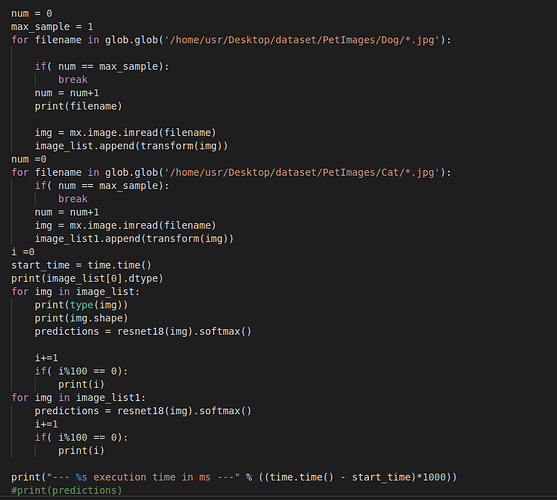

This is the mxnet code:

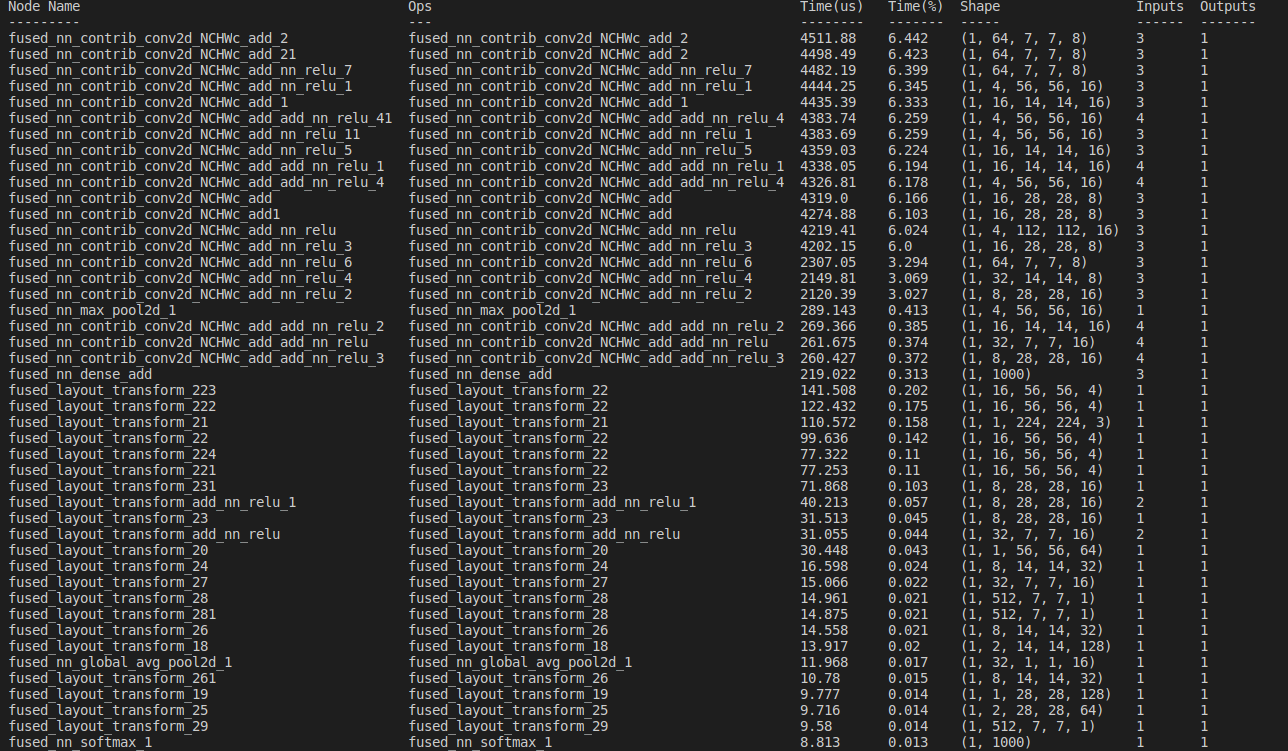
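For the two numbers to be comparable, both models should be timed the same way. Below is a minimal sketch of a framework-agnostic timing harness, not the exact code from the screenshots. The names `time_inference` and `run_once` are hypothetical and come from neither TVM nor MXNet. It assumes `run_once` blocks until the output is actually available (for example, by copying the result to a NumPy array), and it discards a few warm-up runs before measuring.

```python
import statistics
import time


def time_inference(run_once, warmup=10, repeat=100):
    """Return (mean_ms, stdev_ms) for a blocking inference callable.

    run_once is a hypothetical zero-argument callable that performs one
    forward pass and only returns once the output has been computed.
    """
    # Warm-up runs are executed but not timed, so one-off costs such as
    # lazy initialisation or memory allocation do not skew the result.
    for _ in range(warmup):
        run_once()

    samples_ms = []
    for _ in range(repeat):
        start = time.perf_counter()
        run_once()
        samples_ms.append((time.perf_counter() - start) * 1000.0)

    return statistics.mean(samples_ms), statistics.stdev(samples_ms)


if __name__ == "__main__":
    # Stand-in workload; replace with the TVM or MXNet forward pass.
    def dummy_forward():
        return sum(i * i for i in range(10000))

    mean_ms, stdev_ms = time_inference(dummy_forward, warmup=2, repeat=5)
    print(f"mean: {mean_ms:.3f} ms, stdev: {stdev_ms:.3f} ms")
```

Passing the TVM forward pass and the MXNet forward pass through the same function with the same input shape, batch size, and target device should make the 76 ms and 9 ms figures easier to interpret.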

I have attached the graph debug output:
