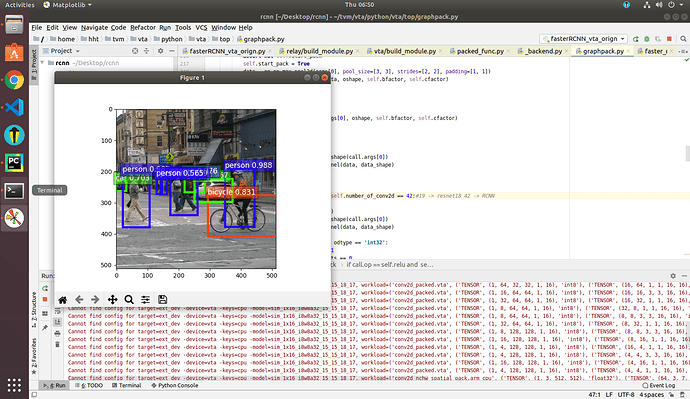

Hi, I have recently been working on deploying Faster R-CNN on VTA. Thanks to the versatility of schedules (see "[TOPI] Using x86 schedules for ARM conv2d"), most LLVM op strategies can be used on VTA.

However, using the generic argsort strategy on VTA has a small problem. I have put together a preliminary workaround at runtime; solving the problem at compile time is beyond my ability.

Argsort Strategy Problem

The problem is caused by the memory allocation strategy. VTA uses VTABufferAlloc to get a vta::DataBuffer pointer, but this pointer is then misused as if it were the data virtual address.

From printing the lowered schedule, my guess is that the pass does not transform the arguments of the extern function: the packed call still receives compute, valid_count and argsort_nms_cpu directly. If tvm.contrib.sort.argsort_nms could take compute_ptr, valid_count_ptr and argsort_nms_cpu_ptr as arguments instead, this problem would be solved at compile time.

    PrimFunc([data, valid_count, hybrid_nms.v1]) attrs={"tir.noalias": (bool)1, "global_symbol": "test_nms"} {
      ...
      let valid_count_ptr = VTABufferCPUPtr(tvm_thread_context(VTATLSCommandHandle()), valid_count)
      let data_ptr = VTABufferCPUPtr(tvm_thread_context(VTATLSCommandHandle()), data)
      // attr [compute] storage_scope = "global"
      allocate compute[float32 * 300]
      let compute_ptr = VTABufferCPUPtr(tvm_thread_context(VTATLSCommandHandle()), compute)
      // attr [argsort_nms_cpu] storage_scope = "global"
      allocate argsort_nms_cpu[int32 * 50]
      let argsort_nms_cpu_ptr = VTABufferCPUPtr(tvm_thread_context(VTATLSCommandHandle()), argsort_nms_cpu)
      ...
      for (j, 0, 50) {
        compute_ptr[j] = data_ptr[((j*6) + 1)]
      }
      // attr [0] extern_scope = 0
      tvm_call_packed("tvm.contrib.sort.argsort_nms", tvm_stack_make_array(compute, tvm_stack_make_shape(1, 50), 0, 2, 0f, 0),
                      tvm_stack_make_array(valid_count, tvm_stack_make_shape(1), 0, 1, 0, 0),
                      tvm_stack_make_array(argsort_nms_cpu, tvm_stack_make_shape(1, 50), 0, 2, 0, 0), 1, (bool)0)
      ...
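
For readers who want to see what this part of the lowered function is meant to compute, here is a minimal NumPy sketch. It mirrors the loop that copies column 1 of each row (the score) into `compute`, followed by a descending sort along axis 1 as requested by the trailing `1, (bool)0` arguments. The function name `argsort_nms_reference` is hypothetical, and filling the positions beyond `valid_count` with their own index is my assumption about the extern function, not something shown in the dump.

```python
import numpy as np

def argsort_nms_reference(data, valid_count, score_index=1, is_ascend=False):
    """Hypothetical NumPy model of the score copy plus the argsort_nms call.

    data:        (batch, num_boxes, 6) float32
    valid_count: (batch,) int32, number of rows to sort per batch
    """
    # Mirrors `compute_ptr[j] = data_ptr[j*6 + 1]` in the lowered code.
    scores = data[:, :, score_index]
    batch, num_boxes = scores.shape
    # Assumption: entries past valid_count keep their own position as index.
    out = np.tile(np.arange(num_boxes, dtype=np.int32), (batch, 1))
    for i in range(batch):
        n = int(valid_count[i])
        keys = scores[i, :n] if is_ascend else -scores[i, :n]
        out[i, :n] = np.argsort(keys, kind="stable")
    return out

# Small example: only the first 3 of 4 boxes are valid.
example = np.zeros((1, 4, 6), dtype=np.float32)
example[0, :, 1] = [0.1, 0.9, 0.5, 0.7]
order = argsort_nms_reference(example, np.array([3], dtype=np.int32))
print(order)  # [[1 2 0 3]]
```

The point of the sketch is only that the extern function needs to read the actual contents of `compute` and `valid_count`, which is why receiving a buffer handle instead of a data address breaks it.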

My Workaround

It seems that using VTABufferCPUPtr to translate the DataBuffer pointer into the data virtual address would solve this problem at runtime. I just chose a simpler method: dereferencing the DataBuffer pointer and using reinterpret_cast to get the data virtual address.

    TVM_REGISTER_GLOBAL("tvm.contrib.sort.argsort_nms")
    .set_body([](TVMArgs args, TVMRetValue *ret) {
      DLTensor *input = args[0];
      DLTensor *sort_num = args[1];
      DLTensor *output = args[2];
      int32_t axis = args[3];
      bool is_ascend = args[4];

      auto dtype = input->dtype;
      // On VTA, DLTensor::data holds a vta::DataBuffer pointer, not the data itself.
      // Read the first 32-bit word of the DataBuffer and treat it as the data
      // virtual address. Note: this only works where pointers are 32 bits wide.
      auto data_ptr_tmp = static_cast<int32_t *>(input->data);
      auto data_ptr = reinterpret_cast<float *>(*data_ptr_tmp);
      auto sort_num_ptr_tmp = static_cast<int32_t *>(sort_num->data);
      auto sort_num_ptr = reinterpret_cast<int32_t *>(*sort_num_ptr_tmp);
      auto output_data_ptr_tmp = static_cast<int32_t *>(output->data);
      auto output_data_ptr = reinterpret_cast<int32_t *>(*output_data_ptr_tmp);
      ...
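
To make the handle-versus-address distinction concrete, here is a small self-contained model using Python's ctypes. `FakeDataBuffer` is a hypothetical stand-in for vta::DataBuffer, and its layout (data virtual address stored as the first field) is an assumption made for illustration; the workaround above relies on the same idea. Unlike the C++ snippet, this sketch reads a pointer-sized word, so it does not depend on 32-bit pointers.

```python
import ctypes

class FakeDataBuffer(ctypes.Structure):
    # Hypothetical layout: the data virtual address is assumed to come first.
    _fields_ = [("data", ctypes.c_void_p), ("phy_addr", ctypes.c_uint32)]

# The real payload lives somewhere else in memory.
payload = (ctypes.c_float * 4)(0.5, 0.25, 0.75, 0.125)
handle = FakeDataBuffer(ctypes.addressof(payload), 0)
handle_addr = ctypes.addressof(handle)

def resolve_data_address(handle_address):
    """Read the first pointer-sized word of the handle: the data address."""
    return ctypes.c_void_p.from_address(handle_address).value

# Misuse: interpreting the handle itself as float data yields the bytes of
# the stored pointer, not the payload.
misread = ctypes.c_float.from_address(handle_addr).value

# Workaround: follow the handle to the data first, then read floats.
virt_addr = resolve_data_address(handle_addr)
recovered = list((ctypes.c_float * 4).from_address(virt_addr))
print(recovered)  # [0.5, 0.25, 0.75, 0.125]
```

The `misread` value is whatever the low bytes of the pointer happen to decode to, which is the kind of garbage the sort function would otherwise consume.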

Because the NMS strategy uses this extern function, I can now run NMS on VTA. Here is my code.

    from __future__ import absolute_import, print_function

    import os
    import time
    import numpy as np
    import vta
    import tvm
    import topi
    from tvm import te
    from tvm import rpc, autotvm, relay
    from vta.testing import simulator

    assert tvm.runtime.enabled("rpc")

    env = vta.get_env()

    # Set ``device=arm_cpu`` to run inference on the CPU
    # or ``device=vta`` to run inference on the FPGA.
    device = "vta"
    target = env.target if device == "vta" else env.target_vta_cpu

    if env.TARGET not in ["sim", "tsim"]:
        # Get remote from tracker node if environment variable is set.
        # To set up the tracker, you'll need to follow the "Auto-tuning
        # a convolutional network for VTA" tutorial.
        tracker_host = os.environ.get("TVM_TRACKER_HOST", None)
        tracker_port = os.environ.get("TVM_TRACKER_PORT", None)
        # Otherwise if you have a device you want to program directly from
        # the host, make sure you've set the variables below to the IP of
        # your board.
        device_host = os.environ.get("VTA_PYNQ_RPC_HOST", "192.168.2.99")
        device_port = os.environ.get("VTA_PYNQ_RPC_PORT", "9091")
        if not tracker_host or not tracker_port:
            remote = rpc.connect(device_host, int(device_port))
        else:
            remote = autotvm.measure.request_remote(env.TARGET,
                                                    tracker_host,
                                                    int(tracker_port),
                                                    timeout=10000)

        # Reconfigure the JIT runtime and FPGA.
        # You can program the FPGA with your own custom bitstream
        # by passing the path to the bitstream file instead of None.
        reconfig_start = time.time()
        vta.reconfig_runtime(remote)
        vta.program_fpga(remote, bitstream=None)
        reconfig_time = time.time() - reconfig_start
        print("Reconfigured FPGA and RPC runtime in {0:.2f}s!".format(reconfig_time))

    # In simulation mode, host the RPC server locally.
    else:
        remote = rpc.LocalSession()

    # Get execution context from remote
    ctx = remote.ext_dev(0) if device == "vta" else remote.cpu(0)

    dshape = (1, 50, 6)
    data = te.placeholder(dshape, name="data")
    valid_count = te.placeholder((dshape[0],), dtype="int32", name="valid_count")
    iou_threshold = 0.7
    force_suppress = True
    top_k = -1
    out = topi.vision.nms.non_max_suppression(data, valid_count, iou_threshold=iou_threshold,
                                              force_suppress=force_suppress, top_k=top_k)

    np_data = np.random.random(dshape).astype(np.float32)
    np_valid_count = np.array([8]).astype(np.int32)

    s = topi.generic.schedule_nms(out)

    with vta.build_config(disabled_pass={"AlterOpLayout"}):
        m = tvm.lower(s, [data, valid_count, out], name="test_nms")
        print(m)
        f = tvm.build(m, target=target, target_host=env.target_host)

    tvm_data = tvm.nd.array(np_data, ctx)
    tvm_valid_count = tvm.nd.array(np_valid_count, ctx)
    tvm_out = tvm.nd.array(np.zeros(dshape[:2], dtype=np.int32), ctx)

    f(tvm_data, tvm_valid_count, tvm_out)
    print(tvm_out)
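
As a rough way to sanity-check the indices printed by the script above, a plain greedy NMS can be written in NumPy. This is a generic sketch, not TVM's implementation: the helper names `box_iou` and `greedy_nms_indices` are hypothetical, the row layout `[class_id, score, x1, y1, x2, y2]` is assumed (only the score at column 1 is confirmed by the lowered code), class ids are ignored as with `force_suppress=True`, and padding the result with -1 is also an assumption. Tie-breaking and the handling of malformed boxes, which random input can produce, may differ from TVM.

```python
import numpy as np

def box_iou(a, b):
    """IoU of two boxes (x1, y1, x2, y2), assuming x1 <= x2 and y1 <= y2."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0.0 else 0.0

def greedy_nms_indices(rows, num_valid, iou_threshold=0.7):
    """Return indices of kept boxes in score order, padded with -1.

    rows: (num_boxes, 6) array, assumed layout [class_id, score, x1, y1, x2, y2].
    """
    # Visit the valid boxes from highest to lowest score.
    order = np.argsort(-rows[:num_valid, 1], kind="stable")
    keep = []
    for idx in order:
        # Keep a box only if it overlaps no already-kept box too strongly.
        if all(box_iou(rows[idx, 2:6], rows[k, 2:6]) < iou_threshold for k in keep):
            keep.append(int(idx))
    out = np.full(rows.shape[0], -1, dtype=np.int32)
    out[:len(keep)] = keep
    return out

example = np.array([
    [0, 0.9, 0.0, 0.0, 10.0, 10.0],
    [0, 0.8, 1.0, 1.0, 10.0, 10.0],    # IoU 0.81 with the first box
    [0, 0.7, 20.0, 20.0, 30.0, 30.0],  # disjoint from both
], dtype=np.float32)
kept = greedy_nms_indices(example, num_valid=3)
print(kept)  # [ 0  2 -1]
```

Feeding it well-formed boxes rather than `np.random.random` output makes the comparison with the VTA result easier to interpret.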