Hi @liangfu,

The simulation output here is:

    tvm/vta/tutorials/frontend > python deploy_vision_on_vta.py
    resnet18_v1 inference graph built in 57.55s!
    File synset.txt exists, skip.

    Execution statistics:
        cycle_count : 29953046

    resnet18_v1 prediction for sample 0
        #1: punching bag, punch bag, punching ball, punchball
        #2: chain mail, ring mail, mail, chain armor, chain armour, ring armor, ring armour
        #3: panpipe, pandean pipe, syrinx
        #4: grocery store, grocery, food market, market
        #5: mailbag, postbag
    Traceback (most recent call last):
      File "deploy_vision_on_vta.py", line 287, in <module>
        assert(cat_detected)
    AssertionError

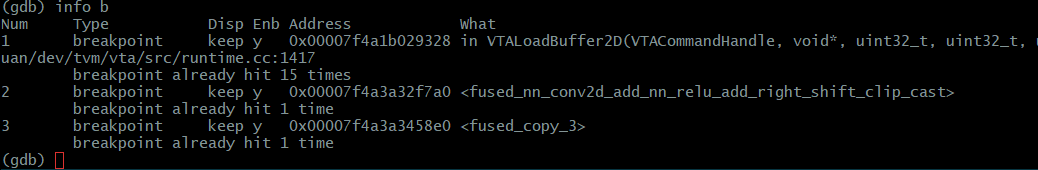

Could you kindly let me know the steps to debug this issue?

Best regards.

Kevin

… but unfortunately both test_benchmark_topi_conv2d and deploy_vision_on_vta still fail. I mean they complete with fail status. The deploy_vision returns a beagle …