`model_graph_opt.log` looks like this:

```json
{"i": ["llvm -mcpu=broadwell", "topi_x86_conv2d_NCHWc", [["TENSOR", [1, 3, 112, 112], "float32"], ["TENSOR", [64, 3, 3, 3], "float32"], [2, 2], [1, 1], [1, 1], "NCHW", "float32"], {}, ["conv2d", [1, 3, 112, 112, "float32"], [64, 3, 3, 3, "float32"], [2, 2], [1, 1], [1, 1], "NCHW", "float32"], {"i": 24, "t": "direct", "c": null, "e": [["tile_ic", "sp", [3, 1]], ["tile_oc", "sp", [2, 32]], ["tile_ow", "sp", [28, 2]], ["unroll_kw", "ot", true]]}], "r": [[0.00022216637243020992], 0, 2.0850822925567627, 1557991675.785746], "v": 0.1}
{"i": ["llvm -mcpu=broadwell", "topi_x86_conv2d_NCHWc", [["TENSOR", [1, 64, 56, 56], "float32"], ["TENSOR", [64, 64, 1, 1], "float32"], [1, 1], [0, 0], [1, 1], "NCHW", "float32"], {}, ["conv2d", [1, 64, 56, 56, "float32"], [64, 64, 1, 1, "float32"], [1, 1], [0, 0], [1, 1], "NCHW", "float32"], {"i": 432, "t": "direct", "c": null, "e": [["tile_ic", "sp", [2, 32]], ["tile_oc", "sp", [2, 32]], ["tile_ow", "sp", [56, 1]], ["tile_oh", "ot", 2]]}], "r": [[0.00047231593158072126], 0, 3.1119916439056396, 1557989850.5378458], "v": 0.1}
{"i": ["llvm -mcpu=broadwell", "topi_x86_depthwise_conv2d_NCHWc_from_nchw", [["TENSOR", [1, 64, 56, 56], "float32"], ["TENSOR", [64, 1, 3, 3], "float32"], [1, 1], [1, 1], [1, 1], "float32"], {}, ["depthwise_conv2d_nchw", [1, 64, 56, 56, "float32"], [64, 1, 3, 3, "float32"], [1, 1], [1, 1], [1, 1], "float32"], {"i": 89, "t": "direct", "c": null, "e": [["tile_ic", "sp", [2, 32]], ["tile_oc", "sp", [2, 32]], ["tile_ow", "sp", [28, 2]]]}], "r": [[0.00027373629171905875], 0, 3.663689613342285, 1557991313.0582528], "v": 0.1}
```
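To read these records more easily, here is a minimal sketch that decodes one line using only the standard `json` module. It assumes the AutoTVM `"v": 0.1` layout visible above: `"i"` holds target, task name, task arguments, keyword arguments, workload and config, and `"r"` holds the measured costs, error number, total time and timestamp. `summarize_record` is a hypothetical helper for illustration, not part of TVM.

```python
import json

def summarize_record(line):
    """Decode one AutoTVM log line (assumed v0.1 layout) into a small dict.

    Hypothetical helper; field positions are inferred from the log above.
    """
    rec = json.loads(line)
    target, task_name, task_args, _kwargs, _workload, config = rec["i"]
    costs, error_no, _all_cost, _timestamp = rec["r"]
    return {
        "target": target,
        "task": task_name,
        # task_args[0] and task_args[1] look like ["TENSOR", shape, dtype]
        "data_shape": task_args[0][1],
        "kernel_shape": task_args[1][1],
        "config_index": config["i"],
        # each config entity is [knob_name, knob_type, value]
        "knobs": [entity[0] for entity in config["e"]],
        "mean_cost_s": sum(costs) / len(costs),
        "error_no": error_no,
    }

sample = '{"i": ["llvm -mcpu=broadwell", "topi_x86_conv2d_NCHWc", [["TENSOR", [1, 3, 112, 112], "float32"], ["TENSOR", [64, 3, 3, 3], "float32"], [2, 2], [1, 1], [1, 1], "NCHW", "float32"], {}, ["conv2d", [1, 3, 112, 112, "float32"], [64, 3, 3, 3, "float32"], [2, 2], [1, 1], [1, 1], "NCHW", "float32"], {"i": 24, "t": "direct", "c": null, "e": [["tile_ic", "sp", [3, 1]], ["tile_oc", "sp", [2, 32]], ["tile_ow", "sp", [28, 2]], ["unroll_kw", "ot", true]]}], "r": [[0.00022216637243020992], 0, 2.0850822925567627, 1557991675.785746], "v": 0.1}'
print(summarize_record(sample))
```

Reading the `"e"` list this way makes it quick to see which knobs each template actually tuned.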

{“i”: [“llvm -mcpu=broadwell”, “topi_x86_conv2d_NCHWc”, [[“TENSOR”, [1, 64, 56, 56], “float32”], [“TENSOR”, [64, 64, 1, 1], “float32”], [1, 1], [0, 0], [1, 1], “NCHW”, “float32”], {}, [“conv2d”, [1, 64, 56, 56, “float32”], [64, 64, 1, 1, “float32”], [1, 1], [0, 0], [1, 1], “NCHW”, “float32”], {“i”: 432, “t”: “direct”, “c”: null, “e”: [[“tile_ic”, “sp”, [2, 32]], [“tile_oc”, “sp”, [2, 32]], [“tile_ow”, “sp”, [56, 1]], [“tile_oh”, “ot”, 2]]}], “r”: [[0.00047231593158072126], 0, 3.1119916439056396, 1557989850.5378458], “v”: 0.1}

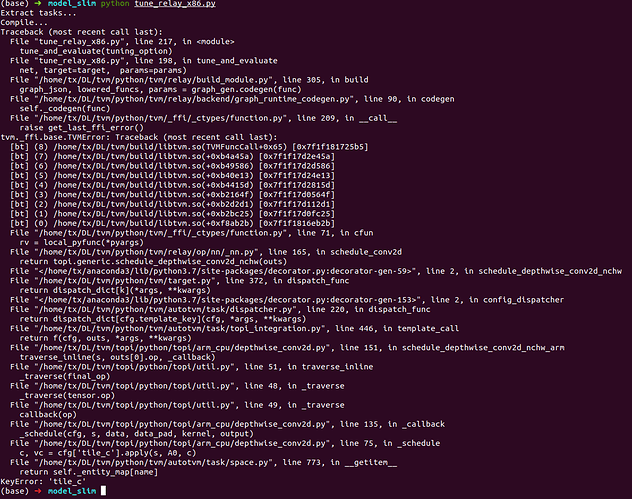

The knob `tile_k` does not appear anywhere in this log.
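One way to confirm that is to collect every knob name per task across the whole file. The sketch below assumes the same v0.1 record layout as above (config at `rec["i"][5]`, knob entities in its `"e"` list) and tolerates blank lines between records. `knobs_by_task` and `log_has_knob` are hypothetical helpers, not TVM APIs.

```python
import json
from collections import defaultdict

def knobs_by_task(lines):
    """Map each task name to the set of knob names found in its configs."""
    found = defaultdict(set)
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        rec = json.loads(line)
        task_name = rec["i"][1]
        config = rec["i"][5]
        for entity in config["e"]:
            found[task_name].add(entity[0])  # entity = [name, type, value]
    return found

def log_has_knob(path, knob):
    """Return True if any record in the log file lists `knob` in its config."""
    with open(path) as f:
        return any(knob in names for names in knobs_by_task(f).values())
```

For example, `log_has_knob("model_graph_opt.log", "tile_k")` reports whether any record's config defines `tile_k`, and `knobs_by_task` shows which templates define which knobs.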