So there is no solution to this issue for now. Maybe I should use Pillow instead of OpenCV. Thanks.

It would be good if someone could investigate whether this is an issue with TVM.

Instead of cv2, I used imageio to read videos and PIL.Image to resize images.

I still get the same problem.

Without the relay.build_module.build call: reading a video frame costs 2 ms, resizing an image costs 2 ms.

With the relay.build_module.build call: reading a video frame costs 7 ms, resizing an image costs 1.5 ms.
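For anyone who wants to reproduce per-step numbers like these, here is a minimal timing sketch. The helper name `average_ms` is made up for illustration, and the workload in the usage part is only a stand-in for the real frame read or resize call.

```python
import time


def average_ms(fn, repeat=100):
    """Return the mean wall-clock time of fn() in milliseconds."""
    start = time.perf_counter()
    for _ in range(repeat):
        fn()
    return (time.perf_counter() - start) * 1000.0 / repeat


if __name__ == "__main__":
    # Stand-in workload; replace it with the real frame read or resize call.
    print(f"{average_ms(lambda: sum(range(1000))):.3f} ms per call")
```

Running it once before and once after the build call should show whether the slowdown is in the read step, the resize step, or both.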

Maybe you could try one quick workaround: rebuild TVM with set(USE_OPENMP gnu) in config.cmake.

It works. Thanks.

Thanks

You can also try setting TVM_BIND_THREADS, as mentioned in "Auto-tuning too slow".

They might be the same issue.

It works, too.

Can you explain how you set TVM_BIND_THREADS so that we can look into the issue?

Server 1: I haven't rebuilt TVM. Before running python darknet_evaluate.py, I set the environment variables with export TVM_NUM_THREADS=8 and export TVM_BIND_THREADS=8, and then I get 80-90 fps.

Server 2: I rebuilt TVM with set(USE_OPENMP gnu), and then I get 90-100 fps.
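The Server 1 setup can also be done from inside the script instead of the shell. This is only a sketch: `set_tvm_thread_env` is a hypothetical helper, the values are the ones reported above, and setting the variables before importing tvm is a conservative assumption about when TVM reads them.

```python
import os


def set_tvm_thread_env(num_threads, bind_threads):
    """Export the two TVM threading variables for the current process."""
    settings = {
        "TVM_NUM_THREADS": str(num_threads),
        "TVM_BIND_THREADS": str(bind_threads),
    }
    os.environ.update(settings)
    return settings


if __name__ == "__main__":
    # Same values as the Server 1 report above.
    print(set_tvm_thread_env(8, 8))
    # import tvm  # assumed safest to import only after the variables are set
```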

I think the reason is exclude_worker0. We set it to true by default, which binds the master thread to core 0. Once the OpenCV threads come in, I see core 0 stay at 100% for a very long time.

So I think the better way is to set exclude_worker0 to false by default.

    // if excluding worker 0 and using master to run task 0
    #ifndef _LIBCPP_SGX_CONFIG
    bool exclude_worker0_{true};
    #else
    bool exclude_worker0_{false};
    #endif

change into

    bool exclude_worker0_{false};

cc @yidawang

We set exclude_worker0_ to be true by default because in most of the cases the master has not much to do. In your case if you have heavy work in the master, you can manually set it to be false. BTW, I think we should have a way to specify this value via an ENV VAR.

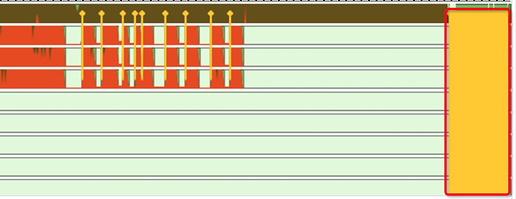

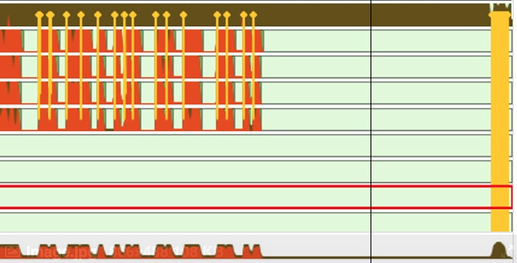

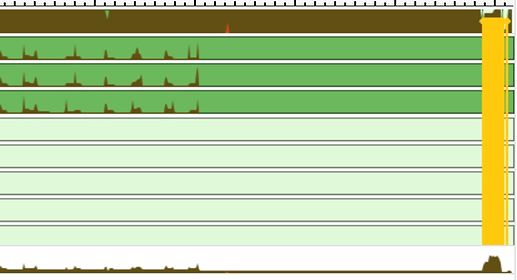

I used VTune to compare binding task 0 to the master thread with not binding it.

- Bind task 0 to master: thread transitions take up much of the time. Yellow marks the thread transitions.

- Don't bind task 0 to master

- OMP

So yes, we should add an env var for this. For example, we could add TVM_EXCLUDER_WORKER0: if users pass TVM_EXCLUDER_WORKER0=0, we change the value to false. How about this solution?
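To make the proposed semantics concrete, here is a small Python model of the lookup. It is only an illustration: the real change would live in TVM's C++ thread pool, the function name `exclude_worker0` is made up, and the variable name is the one proposed in this post.

```python
import os


def exclude_worker0(environ=None, default=True):
    """Model of the proposal: keep the default unless the variable is set to 0."""
    env = os.environ if environ is None else environ
    raw = env.get("TVM_EXCLUDER_WORKER0")  # name as proposed in this thread
    if raw is not None and raw.strip() == "0":
        return False
    return default


if __name__ == "__main__":
    print(exclude_worker0({}))                              # variable unset
    print(exclude_worker0({"TVM_EXCLUDER_WORKER0": "0"}))   # explicitly disabled
```

Passing the environment as a plain dict keeps the rule easy to check without touching the real process environment.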

A common use case is auto-tuning. If we quantize a model (which starts the thread pool during constant folding) and then extract tasks and tune them, tuning will be very slow because only thread0 is used instead of every thread. So it is necessary to let users know about this.

@vinx13 @yidawang would you mind if I open a PR to read the TVM_EXCLUDER_WORKER0 environment variable? I think this problem is common when using OpenCV or auto-tuning, as @vinx13 said. We should have a way to solve it.

Sure, that would be helpful. But for the auto-tuning case this still seems a little painful, as users are likely to forget to set it.

@vinx13 I agree with you. There are at least 2 cases where we need it.

- OpenCV + TVM, as in this case, which is common when we use TVM to deploy CNN models in production

- Auto Tuning

I prefer changing the default value of exclude_worker0_, i.e. bool exclude_worker0_{false};, and then exposing an environment variable TVM_EXCLUDER_WORKER0; when it is set to 1, we change the value to true. I think that is more reasonable.

How about this?

cc @yidawang

Am I missing something? Why would exclude_worker0_ lead to “only thread0 is used instead of every thread”?

I am fine with adding the ENV VAR. Please feel free to submit the PR.

In AutoTVM, the default behavior is to use multiprocessing.Pool to use all CPU cores. However, if we start a TVM threading backend before running AutoTVM, the CPU affinity of the process will be changed. As a result, all processes created by mp.Pool will inherit that affinity.
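The inheritance can be seen without TVM at all. The sketch below is Linux-only and stands in for TVM's pinning with a plain os.sched_setaffinity call, which is an assumption made for illustration; `child_affinities` is a hypothetical helper name. It uses the fork start method explicitly so the workers are created after the parent has been pinned.

```python
import multiprocessing as mp
import os


def child_affinities(num_tasks=4):
    """Pin this process to one core, then report the affinity each pool worker sees."""
    original = os.sched_getaffinity(0)  # Linux-only API
    pinned = {min(original)}
    os.sched_setaffinity(0, pinned)     # stand-in for what a thread pool might do
    try:
        ctx = mp.get_context("fork")
        with ctx.Pool(processes=2) as pool:
            # pid 0 means "the calling process", so each worker reports on itself.
            seen = pool.map(os.sched_getaffinity, [0] * num_tasks)
    finally:
        os.sched_setaffinity(0, original)  # restore the parent's affinity
    return pinned, seen


if __name__ == "__main__":
    pinned, seen = child_affinities()
    print("parent pinned to:", pinned)
    print("workers saw:", seen)
```

Every worker reports the same single-core set as the parent, which is why a pool created after the affinity change ends up crowded onto one core.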