Will TVM support variable input shapes for code generation in the future? In NMT and ASR, the network input size is not constant, so it would be wonderful if TVM could support variable input lengths.

@tqchen

Interesting topic to discuss and research …

TVM already supports variable input length. You can use tvm.var('m') to set up the input shape instead of a constant; see http://docs.tvmlang.org/tutorials/get_started.html#sphx-glr-tutorials-get-started-py

This works well for most operators. For performance-critical operators, we still need a bit of effort to make the performance as good as the constant-shape ones.

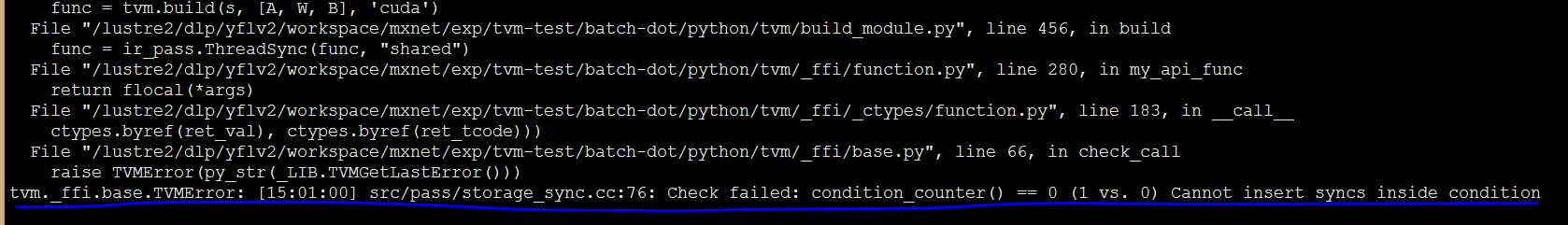

Thx. According to the tutorial, tvm.var can set up the input shape. However, when I try to use it to generate CUDA code, the following error occurs.

This error can be reproduced by changing

https://github.com/dmlc/tvm/blob/master/tutorials/optimize/opt_conv_cuda.py:28

to

batch = tvm.var('batch')

Is there any way to solve that?

This is a limitation of the current variable-length support when the split generates conditions. We will need more improvement in this direction. In the meantime, it might still be helpful to statically generate a few kernels, one for each range of batch sizes (like bucketing), and optimize those.
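To make the bucketing suggestion concrete, here is a minimal sketch in plain Python. It is not TVM code: the bucket sizes, the helper names (`pick_bucket`, `pad_batch`, `run_bucketed`) and the `kernels` dictionary are all hypothetical, and each entry in `kernels` stands in for a kernel that was compiled statically for that fixed batch size.

```python
import bisect

# Hypothetical bucket sizes: one statically compiled kernel per size.
BUCKETS = [1, 2, 4, 8, 16, 32]


def pick_bucket(batch, buckets=BUCKETS):
    """Return the smallest bucket size that is >= batch."""
    i = bisect.bisect_left(buckets, batch)
    if i == len(buckets):
        raise ValueError("batch size %d exceeds largest bucket" % batch)
    return buckets[i]


def pad_batch(rows, bucket, pad_row):
    """Pad a list of rows with copies of pad_row up to the bucket size."""
    return list(rows) + [pad_row] * (bucket - len(rows))


def run_bucketed(rows, kernels, pad_row):
    """Dispatch to the kernel for the matching bucket, then drop the padding."""
    bucket = pick_bucket(len(rows))
    padded = pad_batch(rows, bucket, pad_row)
    out = kernels[bucket](padded)  # kernel built for exactly `bucket` rows
    return out[:len(rows)]
```

The caller pays for the padded rows, so the bucket spacing trades the number of kernels to compile against wasted work per call.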

@tqchen Thx, bucketing can be helpful, but it is not very convenient when both the batch size and the image size change (too many combinations). It also requires extra padding of the input. Is there any plan to improve the variable-length support in the near future?

Hi, is there any progress on, or a schedule for, variable-length support?

@tqchen Hi, is there any plan to improve the support for variable-length input? It is an important feature for inference applications.

Hi, it seems we are working on something very similar. Any progress? Or could we discuss this privately?

No, I’m waiting for TVM to support this feature. Currently, we still use cuDNN/cuBLAS in our inference application.