I am trying to use the templates that TVM implements to tune single operators. I am using the code mentioned in this discussion:

import os

import numpy as np

import tvm

from tvm import te

from tvm import autotvm

from tvm import relay

import tvm.relay.testing

from tvm.autotvm.tuner import XGBTuner, GATuner, RandomTuner, GridSearchTuner

from tvm.contrib.util import tempdir

import tvm.contrib.graph_runtime as runtime

target = tvm.target.cuda()

N, H, W, CO, CI, KH, KW, strides, padding = 1, 7, 7, 512, 512, 3, 3, (1, 1), (1, 1)

data = te.placeholder((N, CI, H, W), name='data')

kernel = te.placeholder((CO, CI, KH, KW), name='kernel')

dilation=1

dtype = "float32"

kernel_shape = (CO, CI, KH, KW)

ctx = tvm.gpu()

out = relay.nn.conv2d(data, kernel, strides=strides, padding=padding, dilation=dilation, channels = CO, kernel_size = (KH, KW), data_layout='NCHW',out_layout='NCHW', out_dtype=dtype)

mod = relay.Module.from_expr(out)

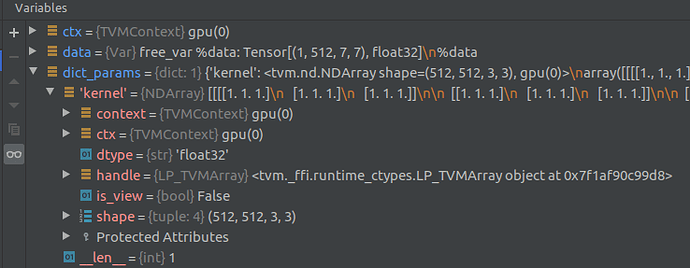

kernel_weights = tvm.nd.array(np.ones(kernel_shape, dtype=dtype), ctx)

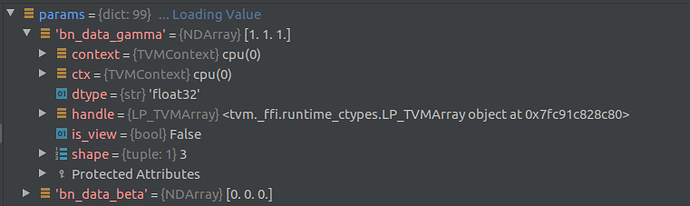

dict_params = {'kernel': kernel_weights}

task = autotvm.task.extract_from_program(mod["main"], target=target, params=dict_params, ops=(relay.op.nn.conv2d,))

The output is:

```
Traceback (most recent call last):
  File "tune_single_op_builtin_tmp.py", line 25, in <module>
    out = relay.nn.conv2d(data, kernel, strides=strides, padding=padding, dilation=dilation, channels = CO, kernel_size = (KH, KW), data_layout='NCHW',out_layout='NCHW', out_dtype=dtype)
  File "/usr/tvm/python/tvm/relay/op/nn/nn.py", line 209, in conv2d
    kernel_layout, out_layout, out_dtype)
  File "/usr/tvm/python/tvm/_ffi/_ctypes/packed_func.py", line 213, in __call__
    raise get_last_ffi_error()
tvm._ffi.base.TVMError: Traceback (most recent call last):
  [bt] (3) /usr/tvm/build/libtvm.so(TVMFuncCall+0x65) [0x7fe8d57fab85]
  [bt] (2) /usr/tvm/build/libtvm.so(+0x7f1898) [0x7fe8d53d6898]
  [bt] (1) /usr/tvm/build/libtvm.so(tvm::RelayExpr tvm::runtime::TVMPODValue_::AsObjectRef<tvm::RelayExpr>() const+0x14b) [0x7fe8d4fd7d5b]
  [bt] (0) /usr/tvm/build/libtvm.so(dmlc::LogMessageFatal::~LogMessageFatal()+0x43) [0x7fe8d4f75d63]
  File "/usr/tvm/include/tvm/runtime/packed_func.h", line 1456
TVMError: Check failed: ObjectTypeChecker<TObjectRef>::Check(ptr): Expect relay.Expr but get Tensor
```

Can anyone help with this?