I run opt_gemm.py building on a cortex-A7 platform(armv7a), using:

target='llvm -device=arm_cpu -model=xxx -target=armv7a-linux-eabi -mattr=+neon,+thumb2 -mfloat-abi=soft’

I also tried the target as arm-linux-gnueabi the same with it’s gcc -v. The llvm version is 8.0.

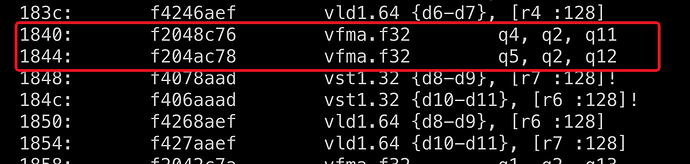

The performance is poor, so i export so library and objdump it, i find there are no vfma.f32 intructions. only see inefficient instructions like vmul,vadd.

In contrast, i write a matmul using c language, and compile it with cross compile gcc and -O3, then i can see vfma.f32 in objdump file.

so what’s wrong with it?