I get the same error even after pulling the latest code as of Oct 7, 2019!

It happens when execution reaches:

timer = m.module.time_evaluator("run", ctx, number=num, repeat=rep)

timer()

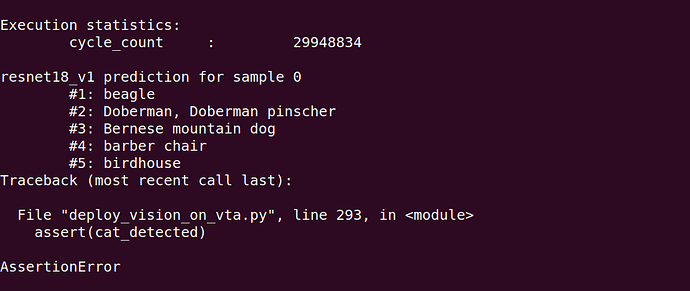

The error output is:

Stack trace:

[bt] (0) /home/sun/.local/lib/python3.6/site-packages/mxnet/libmxnet.so(+0x2b64150) [0x7f8b90894150]

[bt] (1) /lib/x86_64-linux-gnu/libc.so.6(+0x3ef20) [0x7f8ba416ef20]

[bt] (2) /tmp/tmpzoqyk19b/graphlib.o.so(+0x1f27e) [0x7f8b6b6cf27e]

[bt] (3) /home/sun/File/TVM/Projects/tvm/build/libtvm.so(tvm::runtime::ThreadPool::Launch(int (*)(int, TVMParallelGroupEnv*, void*), void*, int, int)+0xfee) [0x7f8b63b3539e]

[bt] (4) /home/sun/File/TVM/Projects/tvm/build/libtvm.so(TVMBackendParallelLaunch+0x63) [0x7f8b63b32e93]

[bt] (5) /tmp/tmpzoqyk19b/graphlib.o.so(+0x1ed2b) [0x7f8b6b6ced2b]

[bt] (6) /tmp/tmpzoqyk19b/graphlib.o.so(fused_nn_conv2d_add_nn_relu+0x3c3) [0x7f8b6b6ce8e3]

[bt] (7) /home/sun/File/TVM/Projects/tvm/build/libtvm.so(+0xbd8210) [0x7f8b63b20210]

[bt] (8) /home/sun/File/TVM/Projects/tvm/build/libtvm.so(+0xc2bfe7) [0x7f8b63b73fe7]

Stack trace:

[bt] (0) /home/sun/.local/lib/python3.6/site-packages/mxnet/libmxnet.so(+0x2b64150) [0x7f8b90894150]

[bt] (1) /lib/x86_64-linux-gnu/libc.so.6(+0x3ef20) [0x7f8ba416ef20]

[bt] (2) /tmp/tmpzoqyk19b/graphlib.o.so(+0x1f27e) [0x7f8b6b6cf27e]

[bt] (3) /home/sun/File/TVM/Projects/tvm/build/libtvm.so(tvm::runtime::ThreadPool::RunWorker(int)+0x157) [0x7f8b63b33947]

[bt] (4) /home/sun/File/TVM/Projects/tvm/build/libtvm.so(std::thread::_State_impl<std::thread::_Invoker<std::tuple<tvm::runtime::threading::ThreadGroup::Impl::Impl(int, std::function<void (int)>, bool)::{lambda()#1}> > >::_M_run()+0x31) [0x7f8b63b36521]

[bt] (5) /usr/lib/x86_64-linux-gnu/libstdc++.so.6(+0xbd66f) [0x7f8b8d73566f]

[bt] (6) /lib/x86_64-linux-gnu/libpthread.so.0(+0x76db) [0x7f8ba3f176db]

[bt] (7) /lib/x86_64-linux-gnu/libc.so.6(clone+0x3f) [0x7f8ba425188f]

terminate called after throwing an instance of 'dmlc::Error'

what(): [15:18:42] /home/sun/File/TVM/Projects/tvm/src/runtime/workspace_pool.cc:116: Check failed: allocated_.size() == 1 (3 vs. 1) :

Stack trace:

[bt] (0) /home/sun/File/TVM/Projects/tvm/build/libtvm.so(tvm::runtime::WorkspacePool::Pool::Release(DLContext, tvm::runtime::DeviceAPI*)+0x7d7) [0x7f8b63b3b527]

[bt] (1) /home/sun/File/TVM/Projects/tvm/build/libtvm.so(tvm::runtime::WorkspacePool::~WorkspacePool()+0x37) [0x7f8b63b39937]

[bt] (2) /lib/x86_64-linux-gnu/libc.so.6(__call_tls_dtors+0x3f) [0x7f8ba41738af]

[bt] (3) /lib/x86_64-linux-gnu/libc.so.6(+0x43117) [0x7f8ba4173117]

[bt] (4) /lib/x86_64-linux-gnu/libc.so.6(+0x4313a) [0x7f8ba417313a]

[bt] (5) /home/sun/.local/lib/python3.6/site-packages/mxnet/libmxnet.so(+0x2b64188) [0x7f8b90894188]

[bt] (6) /lib/x86_64-linux-gnu/libc.so.6(+0x3ef20) [0x7f8ba416ef20]

[bt] (7) /tmp/tmpzoqyk19b/graphlib.o.so(+0x1f27e) [0x7f8b6b6cf27e]

[bt] (8) /home/sun/File/TVM/Projects/tvm/build/libtvm.so(tvm::runtime::ThreadPool::Launch(int (*)(int, TVMParallelGroupEnv*, void*), void*, int, int)+0xfee) [0x7f8b63b3539e]

[1] 3914 abort (core dumped) python3 -m pdb vta/tutorials/frontend/deploy_vision_on_vta.py

Do you have a solution or any suggestions for this?